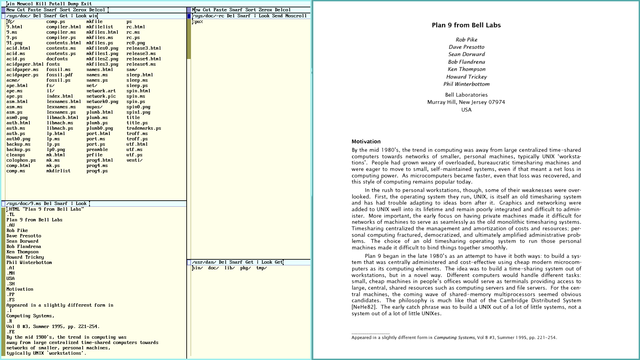

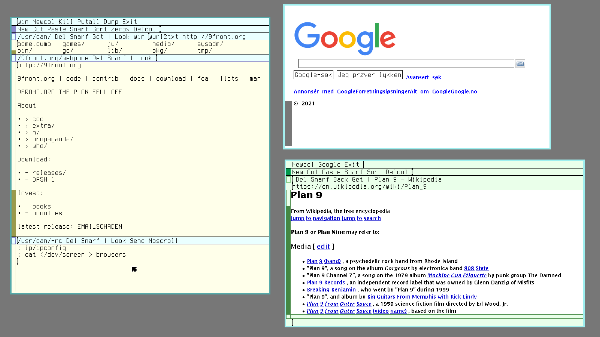

Briefly, Plan 9 from Bell Labs is an operating system developed by the original UNIX design team. After nearly two decades of work on Research UNIX, the team decided in the late 80's to write a new operating system from scratch. Plan 9 was finally released in 1992, and a few years later they released yet another operating system called Inferno, which shares many of the same characteristics as its sister project. These systems, and variations thereof, have been in more or less continual development ever since. The history and design philosophy behind these operating systems is interesting, but we will not talk about that here. Instead, this article will focus on the practical aspects of using Plan 9 as a day-to-day desktop system.

Beware that prior exposure to UNIX is a double-edged sword. There are similar-sounding commands and conventions on the two platforms, and Plan 9 does follow the UNIX philosophy (much more so than UNIX, in fact). Nevertheless, Plan 9 is not UNIX! It is an operating system written entirely from scratch, and backwards compatibility was not a goal. If you expect just another Ubuntu spin-off, you will be very disappointed. In fact, let's be clear here: you will be disappointed, period. Now, with that disclaimer out of the way, let's have some fun!

The 4th edition of Plan 9 was released in 2002. It was essentially a rolling release that continued to receive updates from Bell Labs until 2015, when the project was officially discontinued. In early 2021, though, Bell Labs gave ownership of all previous Plan 9 sources to the Plan 9 Foundation. The goal of this foundation is to continue the development of Plan 9, but so far not much has happened. There are several community forks around, though; two of them, 9legacy and 9front, sprang into existence around 2010. If you want to use Plan 9 as a day-to-day desktop, which is the focus of this article, I strongly recommend going with 9front. It is likely the only candidate that will actually run on your physical hardware, and it has many features that a modern user takes for granted, such as auto-mounting USB sticks, wifi support, working audio, video playback and git. 9front has an excellent fqa and community wiki that do a far better job of presenting accurate information than I do (be prepared for quirky humor though!). Still, it can be interesting to play with 9legacy too, if only out of historical curiosity, so wherever "classic Plan 9" (9legacy and the old 4th edition of Plan 9 are nearly identical) differs significantly from 9front, I will give some pointers in this article. For classic Plan 9, the Plan 9 wiki from Bell Labs is a better source of documentation than the 9front resources.

More than anything, Plan 9 is a simple operating system. The kernel is only 200,000 lines of code, and the userland about a million. In comparison, the source code for the Firefox web browser is more than 24 million lines of code! As you might imagine, then, there are no "modern" web browsers in Plan 9. There are no office suites, triple-A games, VOIP or repositories of 30,000 pre-compiled packages. Plan 9 is not for the faint of heart!

Of course there are workarounds for the above limitations; here are a few suggestions:

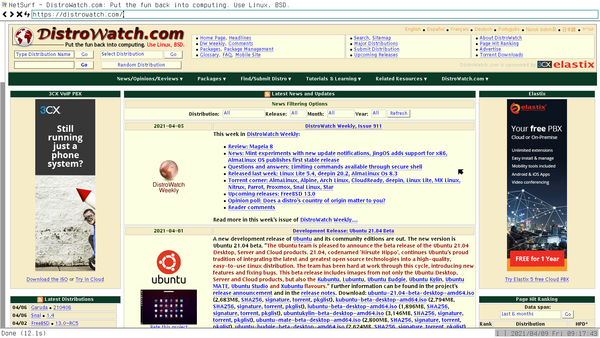

It is simple enough to connect to a remote UNIX/Windows machine from Plan 9 using VNC, or vice versa (I use the term "UNIX" broadly: it includes Mac, Android, Linux, BSD, etc.). From Plan 9 you can connect to a VNC server using vncv, or run a VNC server with vncs (there is little reason to run a VNC server on Plan 9, though; use drawterm, mentioned below, instead).

For example, assume you have tigervnc installed on a UNIX machine with the IP address 192.168.0.1, and you want a VNC screen resolution of 1366x768 pixels. Run vncserver -geometry 1366x768 :1 on that machine and give it a login password (if you are not prompted for a password, you may need to run vncpasswd first). Now, on the Plan 9 machine, run the command vncv 192.168.0.1:1 and log in. By default this will probably run a very basic twm desktop, which makes many inexperienced users suspect that the desktop failed somehow. You probably want to change ~/.vnc/xstartup to run a fancier window manager. To use openbox instead of twm, for instance, add this line to the file:

```
exec /usr/bin/openbox-session
```

You can choose whatever desktop you want here, but beware that configuring xstartup gets vastly more complex if you use some bloated mess like GNOME or KDE.

It is possible to connect to a remote Windows machine using RDP; see rd if you need that sort of thing.

9front ships with working ssh and sshfs clients (sshfs mounts the remote file system in /n/ssh), but classic Plan 9 has a very outdated version of ssh that in all likelihood will not (or at least should not) be able to connect to your UNIX machines.

It is in fact much easier to bring Plan 9 technologies to foreign systems than vice versa, and there are good solutions for working with Plan 9 from UNIX. We will discuss technologies such as plan9port and drawterm later, but for now, let's talk about mounting the Plan 9 file system natively in UNIX using the 9P protocol. There are various ways you can do this, including mounting it directly (in Linux, at least) like so: sudo mount -o rw -t 9p 192.168.0.1 /mnt (substitute the IP address of the Plan 9 machine you're using). But you will probably get better results using one of the many 9P clients out there, such as 9pfuse from the plan9port package, or 9pfs. You can use the latter like so: 9pfs 192.168.0.1 /mnt, assuming you have the right privileges.
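To get a feel for what 9P looks like on the wire, here is a minimal Python sketch that builds the first message of every 9P session, Tversion, and decodes the server's Rversion reply. The layout (little-endian integers, strings prefixed with a 2-byte length) follows the 9P2000 message format described in intro(5) and version(5); the helper names are my own, and the probe function assumes the Plan 9 machine serves plain, unauthenticated 9P on TCP port 564, which depends entirely on how its listeners are configured.

```python
import socket
import struct

TVERSION, RVERSION = 100, 101  # message type numbers from 9P2000
NOTAG = 0xFFFF                  # Tversion is sent with the special "no tag" value


def pack_string(s: str) -> bytes:
    """Encode a 9P string: 2-byte little-endian length, then UTF-8 bytes."""
    data = s.encode("utf-8")
    return struct.pack("<H", len(data)) + data


def tversion(msize: int = 8192, version: str = "9P2000") -> bytes:
    """Build Tversion: size[4] type[1] tag[2] msize[4] version[s]."""
    body = struct.pack("<BHI", TVERSION, NOTAG, msize) + pack_string(version)
    # The size field counts the whole message, including its own 4 bytes.
    return struct.pack("<I", 4 + len(body)) + body


def parse_rversion(msg: bytes) -> tuple[int, str]:
    """Decode an Rversion reply into (msize, version)."""
    size, mtype, tag, msize = struct.unpack_from("<IBHI", msg, 0)
    if size != len(msg) or mtype != RVERSION or tag != NOTAG:
        raise ValueError("not a well-formed Rversion message")
    (slen,) = struct.unpack_from("<H", msg, 11)
    return msize, msg[13:13 + slen].decode("utf-8")


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from sock, or fail if the peer hangs up."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf += chunk
    return buf


def probe(host: str, port: int = 564) -> tuple[int, str]:
    """Send Tversion to host:port and return the negotiated (msize, version)."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(tversion())
        header = recv_exact(sock, 4)
        (size,) = struct.unpack("<I", header)
        return parse_rversion(header + recv_exact(sock, size - 4))
```

From the UNIX side, probe("192.168.0.1") should return the negotiated message size and the version string (normally "9P2000"). If it fails instead, the listener most likely expects an authentication step before 9P begins, and a plain client like the ones above will have the same problem.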

There is some support for NFS and SMB in Plan 9 (see nfs(4) and cifs(4)), but I don't recommend using NFS; the Plan 9 client is very outdated. Speaking of outdated, you naturally have ftpfs and telnet as well.

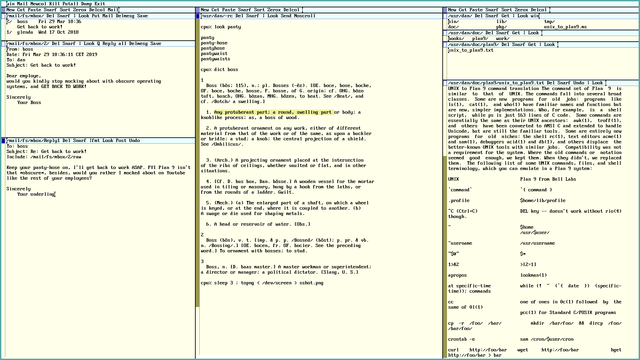

There is a fairly good port of Plan 9 userland programs and services for UNIX called Plan9Port (or p9p for short; a more lightweight alternative is 9base), and it is available in the repositories of most popular UNIX systems. Once it is installed, use the 9 command to run the Plan9Port programs rather than their UNIX counterparts, e.g. 9 acme. It does not fully replicate the Plan 9 experience, of course, but it does make UNIX less of a pain to use.

To run a full Plan 9 shell, using Plan9Port commands instead of the UNIX equivalents, either run 9 acme, execute win in it and run 9 rc, or run 9 9term and then run 9 rc. You can configure your ~/.xinitrc file to start the Plan 9 look-alike window manager with exec 9 rio, and set up a very authentic-looking Plan 9 desktop. But there is little point in doing so, unless you really want to hide the fact that UNIX is running in the background. Plan9Port's rio only looks like the Plan 9 window manager; it doesn't have the same useful features, and it is quite flaky to boot. In my opinion there are far better native UNIX alternatives, including the Plan 9-inspired wmii/dwm window managers, or variations thereof.

It is possible, but much harder, to go in the other direction. Plan 9 has a UNIX compatibility suite of programs and libraries in /bin/ape, such as ape/sh, which gives you a ksh-like UNIX shell (run vt first to emulate a VT-100 terminal), and ape/cc, a POSIX-compliant C compiler with corresponding UNIX-friendly libraries, plus a few other UNIX'y utilities. This UNIX compatibility layer is old and largely unmaintained. 9front has its own semi-official portability layer called npe; see the 9front porting guide for further tips.

Note, however, that simply having a UNIX shell does not mean that all your shell scripts will magically work. Plan 9 has its own versions of cat, echo, ls, sed and so on. If your script uses these programs, it needs to be adapted to the Plan 9 versions of them. As always, read the man pages carefully (no, really, read them!).

Finally, even though Plan 9 has very good POSIX compliance, it's by no means certain that UNIX programs will compile. Most will not. The majority of UNIX software does not restrict itself to POSIX alone, but requires large extensions, most of which are not supported. For example, Plan 9 does not have X (by default), curses, native BSD sockets, numerical UIDs/GIDs or links, so any program depending on such things needs to be patched and rewritten before it will work. In practice only the simplest of programs can be ported with any reasonable amount of effort.

In a traditional Plan 9 network, one or more CPU servers provide file and authentication services to multiple diskless workstations, called "terminals". These terminals are desktop systems connected to the CPU server. This is a bit confusing for UNIX users, so in this article we will refer to a diskless workstation as a remote desktop, and to a window running a shell as a terminal, as is the custom in UNIX. If you have set up a CPU server in Plan 9 (see sections 7.5 and 7.6 in the 9front fqa; see also the Quick CPU+AUTH+Qemu+Drawterm HOWTO below), either physically or on a virtual machine, you can emulate a Plan 9 remote desktop on a UNIX/Windows machine with drawterm (for classic Plan 9 use this link). drawterm works very well; it also has access to the host file system under /mnt/term, making it easy to work on files across operating systems.

There is a third-party port of X for Plan 9, and together with linuxemu it can be used to run Linux software natively (see section 8.7.1 in the 9front fqa). This implementation is not perfect, however: it is old and tedious to work with, and I have had little success with it myself.

There are many different virtualization solutions available for UNIX/Windows capable of running Plan 9, such as qemu and VirtualBox. Plan 9 has very limited hardware support, especially if you want to use the classic versions of this operating system. Virtualization is a practical way to eliminate such concerns.

9front also includes its own hypervisor, vmx (see section 8.7.5 in their fqa), capable of running Linux, OpenBSD, allegedly Windows, and plausibly other operating systems. PS: You need modern Intel hardware for this to work.

I assume you have already downloaded and installed Plan 9, either on a physical machine or on a virtual one. If not, you can get the 9front iso and follow the installation instructions in section 4 of their fqa. Again, this is not a guide for installing and configuring a Plan 9 system; use the 9front fqa for that. Our focus here is doing day-to-day tasks after the initial setup is done.

PS: This is also the subject of section 8 in the 9front fqa - Using 9front. This article simply repeats and expands upon some of that content.

PS: If you want to install 9legacy, it follows much the same steps as 9front, but here are a couple of tips: after hitting Return at the "Location of archives [browse]:" prompt, you will see /%; just type exit to continue the installation. Choose plan9 when asked to "Enable boot method"; otherwise just follow the defaults and choose "y" at yes-or-no prompts. Finally, when installing 9legacy in qemu, be sure to set the virtual hard disk as the first disk drive, e.g. qemu-system-x86_64 -m 2G -hda 9legacy.img; do not use -hdd or similar, otherwise boot setup will fail during installation.

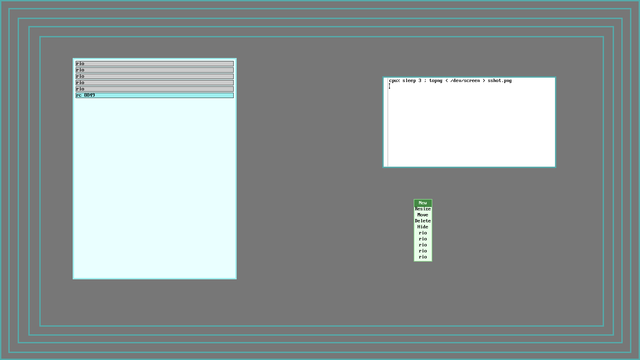

The window manager in Plan 9 is called rio. It provides a remarkably clean and simple desktop, somewhat akin to twm in UNIX. Unlike twm, though, it doesn't look like crap by default, and its source is only 6000 lines of code, which incidentally is also about the same size as Plan 9's graphical library, libdraw. In contrast, twm's source is closer to 30,000 lines, and the X Window System backend more than 8 million!

Window management is straightforward: rio provides only one menu, which you can access by right-clicking on the desktop background. Hold down the mouse button while you are selecting a menu option, and release it only after you have made your choice. To create a new window, which is always a terminal, choose New. The mouse pointer changes to a cross. Right-click in one corner and drag the mouse; a red rectangle appears. Release the mouse button when the window has the size you want.

If you choose the Delete option in the rio menu, the mouse pointer changes to a cross with a circle. Right click on the window you wish to delete. If you Hide a window, it will appear in the rio menu, select it from the menu to make it visible again.

You can also Resize and Move a window using the rio menu, but it's easier to click and drag the windows directly: to resize a window, left-click the blue border and drag; to move it, right-click the border and drag.

Right clicking in a terminal window will also bring up the rio menu, but other programs will not necessarily do so. If you need to access the window manager menu while running a fullscreen acme window for instance, you must first shrink the window or move it out of the way, and then right click the gray rio background. By default there are no key-bindings to control rio, you can only do so using the mouse (What?!? Mouse actions are required?!? I know right, Plan 9 is so radical - take a look at the workspaces section below though).

In order to use Plan 9 effectively, you need a 3-button mouse. Such mice are quite common nowadays, with the scroll wheel doubling as the middle mouse button (for laptops I recommend ThinkPads). The 3 mouse buttons, and combinations of clicks, are used throughout Plan 9 for manipulating text. If you don't have a mouse with 3 buttons, you can simulate the middle click by holding down the Shift key and right clicking. But this will quickly become tedious, so go out and buy a 3-button mouse ASAP.

You can select text in the normal way, by left-clicking and dragging. You can also double-click a word to select it. If you double-click at the end of a line, the whole line will be selected, and if you double-click a parenthesis, square bracket or some such delimiter, the text between the delimiters will be selected.

To cut the selected text, hold down the left button and click the middle mouse button. To paste the text, click the left button and while holding it down, click the right button. To "copy" text, left click and middle click, release the middle mouse button and click the right button. Such combinations of mouse clicks are called mouse chording. They are used very consistently in Plan 9 programs, and feel intuitive enough once you get the hang of it.

There are only a handful of programs in Plan 9, but they are simple to learn and work very well. Some essential applications are:

You do not have to play around much in the Plan 9 terminal before you realize that it works quite differently from UNIX. One surprise is that terminals do not auto-scroll: if you cat a very long file, for instance, it will just display the first screenful of text and wait for you to manually hit PgDn or the down arrow key. This behavior is actually very convenient, since it removes the need for pagers. But sometimes it can work against you. If you're compiling software, for instance, the compilation will stop once the text has filled the screen, and only continue if you manually scroll down. Clearly, this is not what you want! Middle-click the terminal window and select scroll; it will now auto-scroll, just as UNIX terminals do. You can go back to the default behavior by middle-clicking and selecting noscroll.

Another annoyance might be that there is no Tab auto-completion. Don't worry, use Ctrl-f instead, it does much the same thing. There is no advanced auto-completion of program names and flags, like zsh and fish users might be accustomed to. But this really isn't an issue since Plan 9 has virtually no programs or flags to speak of, as you will discover soon enough.

The third thing you may notice is that the terminal text can be freely edited. You can add any text anywhere and copy paste text arbitrarily, so the Plan 9 terminal feels much more like a text editor than a UNIX terminal (a consequence of this free-form editing is that the cursor has to be at the end of the last line in order to execute a command with the Return key; otherwise Return just adds a literal newline to the text, which is only mildly annoying once you get used to it). What's the point of this novel design? First of all, it eliminates a host of special-purpose programs that UNIX requires: for example, there is no clear command in Plan 9, you just cut the text. There is no reset or readline either, as they are not needed. Secondly, once learned, this behavior feels very intuitive. Why shouldn't you be able to cut and paste text and freely sprinkle your terminal output with random comments? Going back to a UNIX terminal after having spent some time in Plan 9 really feels like leaving the 90's and going back to the 70's (fun tip: check out /bin/hold to see how a basic text editor in Plan 9 can be written in just five lines of shell script!).

Lastly, there is no history command in the Plan 9 terminal; hitting the up arrow key on the keyboard will just move the pointer one line up, like any text editor would. What else did you expect? Relax, though: you can rerun the previous command with "" (" will reprint it).

Hang on! The command ""? Aren't double quotes used for quoting?!? Not in Plan 9; double quotes are just ordinary characters. Whereas UNIX has three escape characters, Plan 9 has only one, the single quote (well, OK, backslash is also used in some situations). The UNIX command "$message has a literal \$ and ' sign" would be $message' has a literal $ and '' sign' in Plan 9 (two single quotes within single quotes are interpreted as one literal single quote).
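rc's quoting rule is simple enough to capture in a few lines. The sketch below is a hypothetical Python helper (not a Plan 9 tool) that you might use on a UNIX machine when generating rc commands: wrap the text in single quotes and double every single quote inside it. The last lines rebuild the UNIX-to-rc example from the paragraph above, leaving $message outside the quotes so that rc expands it and joins it to the quoted text that follows (rc's "free caret" rule, see rc(1)).

```python
def rc_quote(s: str) -> str:
    """Return s as one literal rc word.

    Inside single quotes nothing is special to rc except the quote itself,
    which is written twice to stand for one literal quote.
    """
    return "'" + s.replace("'", "''") + "'"


def rc_unquote(word: str) -> str:
    """Invert rc_quote for a word that is a single, well-formed quoted string."""
    if len(word) < 2 or not (word.startswith("'") and word.endswith("'")):
        raise ValueError("expected a single-quoted rc word")
    return word[1:-1].replace("''", "'")


# $message stays unquoted so rc expands it; the literal text is quoted.
cmd = "$message" + rc_quote(" has a literal $ and ' sign")
print(cmd)
```

Note that "$" needs no special treatment at all: once inside single quotes it is just another character, which is exactly why rc gets away with a single quoting mechanism.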

PS: " and "" are actually shell scripts provided by 9front; classic Plan 9 systems do not have them.

Back to our topic of rerunning commands: note that the need to auto-complete text and rerun commands is much greater in UNIX than in Plan 9. It is easy to copy and paste text in the terminal, so use that functionality for what it's worth! You don't need to use insane syntax like ls !$ to run ls with the previous command's last argument, or ^foo^bar to fix a typo in the previous command and rerun it. Just type ls in the terminal and copy paste the previous arguments, and if you need to correct a typo, just do so, then copy paste the result when you're done. There is also a full copy of the terminal text in /dev/text. So the command cat /dev/text > transcript is essentially the same as script in UNIX, > /dev/text is basically clear, and the command grep '^; ' /dev/text is the same as history (assuming, of course, that your shell prompt is ; ). Note that you can search this log for other things than just your previous commands, and you can manipulate this data in many other interesting ways as well. For example, need to do advanced searching or manipulation of the shell history? Just open /dev/text in a text editor, e.g. sam /dev/text.

But what if you want a system-wide history log for all of your windows? There is no such file in Plan 9, but it's easy enough to make one. The following script, for instance, saves your command history to a central file. Only unique commands are saved; if we saved all of the text, our central history file would grow extremely large. It would be quite redundant to have ten thousand entries of cd in our history log, not to mention hundreds of copies of the man pages and text files we have been reading.

```
#!/bin/rc
# savehist - prune and save command history
# usage: savehist

# set some defaults
rfork ne
temp=/tmp/savehist-$pid
hist=$home/lib/text
touch $hist

# rewrite history
cat <{grep '^; ' /dev/text} $hist | sort | uniq > $temp
mv $temp $hist
```

With this in place we can run savehist before exit to save our current history, or we can wrap these steps into one by adding something like this to our $home/lib/profile: fn quit{ savehist; exit } (PS: Don't call this function exit unless you really want a fork bomb!)

In addition to /dev/text you also have /dev/snarf, which holds the "snarf" buffer, the clipboard in Plan 9 speak (if you want to write to the window, use /dev/cons). All of these files refer to your current window, if you want to use these files for a different window, see the rio scripting section below.

The graphical desktop runs "within" the text console in Plan 9, so writing to the system console will actually print the text verbatim onto the screen. For example, running sleep 600; echo Bug Me! > '#c/cons' will send a fairly obtrusive notification to your screen in 10 minutes. This can be a bit disconcerting for a beginner, but it's easy to redirect such messages if you don't want them to clutter up your screen: just run cat /dev/kprint in a window and hide it. See the rio scripting section below for some ideas on how to avoid or abuse this functionality further.

The acme text editor is arguably the main user application for Plan 9; it doubles as the system's file manager, terminal, mail reader and more. It can even be used as a fully fledged window manager, by replacing rio with acme in your $home/lib/profile (but I don't recommend it: you will not be able to run any other graphical programs; then again, why would you want to?).

Let's do a whirlwind tour of acme. The first blue row contains commands for the entire acme window, such as Exit: if you middle-click this word, acme will exit. Dump will create a file called acme.dump, which can be used to save a particular window arrangement and restore it later with acme -l acme.dump. Putall will save all modified text files.

If you middle-click Newcol, a new column will appear. The column has its own row of commands, in the second blue row. Delcol will delete the column. Cut, Paste and Snarf (i.e. "Copy") manipulate text, but it's easier to use mouse chords for this: left and middle-click to Cut, left and right-click to Paste, and finally left and middle-click, then right-click, to Snarf (copy). The mouse chords are awkward to explain, but try them out; they will feel very intuitive with a little practice.

Middle-clicking New will create a new window in the column. It, too, will have its own row of commands. Del will delete the window. The window is initially empty; try writing some random text into it. You will see that a new command appears, Undo (its meaning should be obvious). After typing in some text, you can also hit the Esc key to select the recently added text; hitting Esc again will cut it. How do we save our file? First we need to give it a name: click on the far left side of the menu, left of Del, and type /usr/glenda/testfile (glenda is the default user in Plan 9, and /usr/glenda is the default home directory). Yet another command will appear, Put; middle-click it to save your work. That was a lot of typing! Isn't there an easier way to do this? Sure: remember that Plan 9 allows you to copy and paste pretty much anything. Find the directory you want in a terminal, with Ctrl-f auto-completion and everything, print the directory name with pwd, copy paste that into the acme window, and append your new filename. Easier yet, run touch testfile; B testfile from a terminal, and the file will be opened for you in acme.

By now you will have noticed a unique feature of acme: its menus are pure text. The "buttons" are just regular words. To illustrate, type Del (case sensitive!) somewhere in the yellow text window, then middle-click it. The window will disappear. Del is just a command, same as echo or cat. Another test: type echo hi there, then middle-click and drag so that the red mark covers all three words. hi there will be printed in a new window.

You can use the Look command to search for words in the window. Type monkey a couple of times in the yellow text window, now type Look monkey in the blue window menu, and middle click and drag, to mark the two words. The first occurrence of monkey will be highlighted, run the command again, and the second occurrence will be highlighted, and so on. An easier method however would be to just right-click the word monkey, anywhere in the text, the next occurrence of the word will be highlighted, and the mouse pointer will be moved there. Just right-click again to see the next occurrence of the word, and so on.

The Zerox command in the column menu will duplicate a window, this is very useful if you are editing a long file, and you need to see or edit different parts of the file at the same time, any changes made in one window will appear in the other. Sort will sort the column windows by name, it does not sort the content of the windows. To do that, mark the text, type |sort in the window menu, and middle-click it. As you can see, you can freely use arbitrary Plan 9 commands to manipulate the text in acme.

If you want to do search and replace operations, use the Edit command. This command gives you the command language of the sam text editor, which uses much the same text editing commands as ed (which in turn is similar to sed or vi). For example, double-click one of the monkey words to highlight it, then type Edit s/monkey/chimpanzee/, and middle-click and drag to execute this command. The highlighted word will be changed to chimpanzee. To change all the occurrences of monkey, type Edit ,s/monkey/chimpanzee/g (in vi this would be :%s/monkey/chimpanzee/g).

Side note: Although the above ed style substitution works in sam, sam is not a line-based editor like ed, and a more proper sam command for the above would be: Edit ,x/monkey/c/chimpanzee/ (that is: for each /monkey/ change to /chimpanzee/). To read the sam tutorial, run: page /sys/doc/sam/samtut.pdf

acme lacks many built-in features that a UNIX user might expect, but you can create much of this functionality simply by piping the text through standard utilities. Here are some examples:

Open a New window and type in the filename /usr/glenda to the far left, then type Get to the far right, right of Look, and middle click it. The contents of the /usr/glenda directory will fill the window. If you right-click on a directory in this window, the contents of that directory will be opened in a new window. To do operations on files, just type a command and execute; for example type rm before testfile, and middle click the two words to remove this file. If you right-click a text file, the contents of that file will be opened for editing in acme.

Exactly what happens when you right-click something in acme depends on the word you click. For example, clicking on the word /usr/glenda/pictures/cirno.png will open this picture in the image viewer page, and clicking jazz.mp3 will start playing the audio file with play, provided of course that the files in question exist on your system. The last example also assumes that the jazz.mp3 file is located in the same directory as the one you launched acme from; if not, you need to specify a correct file path. The actual work of connecting the right words to the right programs is handled by plumber, which we will talk about later, but for now it's enough to know that right-clicking a filename anywhere in acme will usually just "do the right thing" (you'll note, though, that actions are evaluated for words, not files).

Each window has a dark blue square to the far left of the menu, you can click and drag this box to resize or move the window to another column. The columns themselves also have a dark blue square, click and drag this to resize or move the column.

You can also right-click on the dark blue window square to hide all the column windows except that one; left-click on it to bring the windows back. Left-clicking on the square will increase the window size a little, and middle-clicking will maximize the window.

Left-clicking on the scroll bar will scroll upwards, right-clicking downwards. Clicking towards the bottom of the scroll bar will scroll a lot, clicking towards the top will only scroll a little. Middle clicking will transport you directly to that portion of the file. Play around and experiment with these mouse actions, pretty soon you will get the hang of it. Other Plan 9 applications with scroll bars work the same way (in 9front at least).

rio does not have virtual workspaces per se, but 9front includes some tools that let you set up a keyboard driven desktop with pseudo-workspaces. For instance, you can add the following snippet to your $home/lib/profile:

```
fn workspaces{
	</dev/kbdtap riow >/dev/kbdtap |[3] bar
}
```

You can now run workspaces and switch to a new "workspace" with Win+n (where Win is the Windows key, between the left Ctrl and Alt keys, and n is a number between 0 and 9). You can also move windows about with Win+Arrow, or resize them with Win+Shift+Arrow (see riow(1) and bar(1) for more info). Classic Plan 9 does not have these tools, although there is an old fork of rio called rio-virtual, that does include virtual workspaces. There are also ways to create such services without the 9front extensions: You'll note that all windows in all riow "workspaces" are listed in the rio menu and can be unhidden. This is because riow doesn't actually add workspaces as such, but rather provides a clever way of hiding and unhiding groups of windows, and thus gives you the illusion of workspaces. For a similar, but more simplistic, way to do this see the rio scripting section below.

It is actually quite easy, though, to manually create pseudo-workspaces in rio: just create a new terminal window and run plumber ; rio. This will run a rio desktop in this window (plumber is not required here, but it will make sure that files opened automatically will only be opened in this isolated rio and not outside of it). You can maximize this "virtual workspace" and do your work, hide the window when you want to go back to your first workspace, then switch back to it by selecting the hidden window in the rio menu. You can have as many of these workspaces as you like, and you can run rio inside rio inside rio ad infinitum... To organize this mess a bit, you can also manually label your workspaces. Let's say you have 4 rio workspaces hidden in the background; the rio menu will just list them as: rio, rio, rio, rio. That's not very helpful. By running grep rio /mnt/wsys/wsys/*/label you will see what window ids these workspaces have. You can then redefine their labels, e.g. echo -n workspace1 > /mnt/wsys/wsys/3/label. The rio menu will now list this window as "workspace1" instead of "rio".
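With many nested rios, the id-to-label bookkeeping can be scripted. The sketch below is a hypothetical host-side helper in Python (Plan 9 does not ship Python; in practice you would write the same loop in rc). It parses the output of the grep command above, assuming grep prefixes each match with its file name as it does when given several files, and prints one echo command per window to paste back into a Plan 9 terminal.

```python
import re

# grep prefixes each match with its file name when given several files,
# e.g. "/mnt/wsys/wsys/3/label:rio".
LABEL_LINE = re.compile(r"^/mnt/wsys/wsys/(\d+)/label:(.*)$")


def rio_windows(grep_output: str) -> list[int]:
    """Return the ids of windows whose label is exactly "rio", sorted."""
    ids = []
    for line in grep_output.splitlines():
        m = LABEL_LINE.match(line)
        if m and m.group(2) == "rio":
            ids.append(int(m.group(1)))
    return sorted(ids)


def relabel(ids: list[int], prefix: str = "workspace") -> list[str]:
    """Generate one rc command per window, numbering labels from 1."""
    return [f"echo -n {prefix}{n} > /mnt/wsys/wsys/{wid}/label"
            for n, wid in enumerate(ids, start=1)]


# Hypothetical sample output; window 12 is not a bare rio, so it is skipped.
sample = """/mnt/wsys/wsys/7/label:rio
/mnt/wsys/wsys/3/label:rio
/mnt/wsys/wsys/12/label:rio notes"""
for cmd in relabel(rio_windows(sample)):
    print(cmd)
```

Matching the label exactly, rather than anything containing "rio", keeps windows you have already renamed from being renumbered.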

Another simple workspace solution is drawterm. Once a Plan 9 CPU server (see sections 7.5 and 7.6 in the 9front fqa, and the Quick CPU+AUTH+Qemu+Drawterm HOWTO section below) has been configured, you can connect as many drawterm clients to it as you wish (all of the workspace-related tricks mentioned above will also work from within drawterm).

First of all, acme is a tiling window manager. Just maximize the editor and do your stuff.

Secondly, you can use your rio startup file, $home/bin/rc/riostart, to automatically set up a desktop that suits your needs. For example, if you have a 1366x768 screen, you can add these lines to open an acme window on the left half of the screen and a terminal window on the right half:

```
window 0,0,683,768 acme
window 683,0,1366,768
```
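The two rectangles (minx,miny,maxx,maxy in pixels) are just the screen width split in half. If you move between machines with different resolutions, a tiny generator can do the arithmetic for you. The helper below is hypothetical, written in Python and meant to run on any machine (it is not a Plan 9 tool); it prints window lines for N equal-width columns that you can paste into riostart, with an empty command meaning a plain terminal, as in the second line above.

```python
def columns(width: int, height: int, cmds: list[str]) -> list[str]:
    """Return riostart `window` lines tiling the screen into equal columns."""
    if not cmds:
        raise ValueError("need at least one command")
    n = len(cmds)
    lines = []
    for i, cmd in enumerate(cmds):
        # Floor division keeps column widths within one pixel of each other,
        # and the last column always ends exactly at the screen edge.
        x0, x1 = width * i // n, width * (i + 1) // n
        lines.append(f"window {x0},0,{x1},{height} {cmd}".rstrip())
    return lines


for line in columns(1366, 768, ["acme", ""]):
    print(line)
```

Called with two columns for a 1366x768 screen it reproduces the two lines above; columns(1366, 768, ["stats", "acme", ""]) would give a three-way split instead.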

Unlike in UNIX, graphical programs executed in a Plan 9 terminal will not launch a new window; rather, the terminal will morph into the new program. In other words, running the PDF/image viewer page, or the web browser mothra, in a terminal will in no way affect window placement. So having an initial window placement that works on your desktop will significantly reduce the need for automatic window tiling. But if you need that, take a look at the rio scripting section below.

We have already seen brief mentions of the Plan 9 plumber a few times in this guide, but let's take a closer look. The plumber is essentially a simple inter-process messaging system. It lets you define a set of actions based on text patterns given to it. For instance, in the system-wide plumber rules in /sys/lib/plumb/basic, you will find the following section:

# open urls with web browser

type is text

data matches 'https?://[^ ]+'

plumb to web

plumb client window $browser

This rule is very simple. If the message is text (it's always text), and it matches "http://" or "https://" followed by non-space characters, it is classified as "web" related and a new program, $browser, is launched with that text as its argument. In effect, whenever a URL is sent to the plumber, it opens in your default web browser. So right-clicking http://9front.org in acme will open that web page, likely in mothra. Running plumb http://9front.org in a terminal has the same effect.
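To get a feel for which messages the rule's pattern accepts, here is a small sketch. It uses POSIX regcomp(3)/regexec(3), not Plan 9's own regexp library, and is written in portable C. It assumes that "data matches" has to match the whole message, which is why the pattern is anchored with ^ and $. That anchoring models plumb(6) as an assumption, not as a quotation. The function name is_web_url is hypothetical.

```c
#include <regex.h>
#include <stddef.h>

/* Return 1 if text matches the URL rule's pattern, 0 if it does not,
   and -1 if the pattern fails to compile. The pattern is the one from
   /sys/lib/plumb/basic, anchored to require a whole-message match. */
int is_web_url(const char *text)
{
	regex_t re;
	int rc;

	if (regcomp(&re, "^https?://[^ ]+$", REG_EXTENDED | REG_NOSUB) != 0)
		return -1;
	rc = regexec(&re, text, 0, NULL, 0);
	regfree(&re);
	return rc == 0;
}
```

With this anchoring, "http://9front.org" matches, while "ftp://9front.org" or a bare "http://" does not, since [^ ]+ needs at least one non-space character.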

You can define your own rules too. A common thing to do is to set the editor and browser variables to change the default text editor and web browser. I also wrote my own simple Epub reader, and added these lines to $home/lib/plumbing so that Epub files always open with my custom reader:

# change default apps

editor = acme

browser = 'mothra -a'

# open epubs with custom script

type is text

data matches '([a-zA-Z0-9_\-./]+)\.(epub|EPUB)'

arg isfile $0

plumb to image

plumb start window eread $file

# load default rules

include basic

The epub rule here adds a check to see whether the given argument is an existing file. If it is, $file is set to this filename. Otherwise the logic is much the same as in the URL rule above. The include basic line at the end is important: without it you would lose all the default system plumbing rules!
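The epub rule combines two tests: a pattern test on the text and an existence test (arg isfile). Here is a rough C analogue, a sketch only. POSIX regex stands in for the plumber's, and stat(2) stands in for isfile; whether isfile also rejects directories is not modelled. Note that an unescaped . before (epub|EPUB) matches any character. This sketch escapes it, so that "book_epub" is rejected. In a POSIX bracket expression the hyphen must come last to be taken literally. The function names are hypothetical.

```c
#include <regex.h>
#include <stddef.h>
#include <sys/stat.h>

/* Return 1 if text looks like a path ending in ".epub" or ".EPUB". */
int looks_like_epub(const char *text)
{
	regex_t re;
	int ok;

	if (regcomp(&re, "^[a-zA-Z0-9_./-]+\\.(epub|EPUB)$",
	    REG_EXTENDED | REG_NOSUB) != 0)
		return 0;
	ok = regexec(&re, text, 0, NULL, 0) == 0;
	regfree(&re);
	return ok;
}

/* Rough analogue of "arg isfile $0": the text must also name
   something that exists on disk. */
int is_epub_file(const char *text)
{
	struct stat st;

	return looks_like_epub(text) && stat(text, &st) == 0;
}
```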

Plumbing rules are not restricted to file suffixes. Suppose you are reading through several documents at the moment and want to bookmark them to keep track of your reading progress. The solution is simple: write a database, let's call it $home/lib/bookmarks, with content similar to this:

# work stuff

/usr/glenda/doc/papers/lengthy.pdf!123

/usr/glenda/doc/papers/plain.txt:206

# plan 9 stuff

/sys/doc/9.ps!3

/sys/doc/acme/acme.ps

acme(1)

# fun stuff

/usr/glenda/doc/books/peter_pan.txt:/Chapter 2/

/usr/glenda/music/podcasts/bsdnow/acdecc6a-f7b7-4d64-b64d-f7be713b78e2.mp3

Right-clicking any of these lines in acme will open the file with an appropriate program: page for PDFs and PostScript files, play for audio files, and acme itself for plain text files. But the default plumbing rules let you be even more specific than that. Plumbing something like lengthy.pdf!123 will not only open the PDF in page, but open it on page 123. Plain text files can also be addressed, such as plain.txt:206 for line 206 of that file, or peter_pan.txt:/Chapter 2/ to open our Peter Pan book and search for the text string "Chapter 2". Such textual addresses are usually used when programming, for instance to open a source file at the offending line by right-clicking a diagnostic message, but we can also use them to keep track of ourselves.
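The three address forms in the bookmark file (file!page, file:line and file:/regexp/) are just a path plus a suffix. The following portable C sketch splits such a line, purely to illustrate the syntax. It is not how the plumber works, since the real parsing is done by the regular expressions in /sys/lib/plumb/basic. The struct bookmark and parse_bookmark names are hypothetical, and paths containing ! or : are not handled.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

enum addr_kind { ADDR_NONE, ADDR_PAGE, ADDR_LINE, ADDR_REGEX };

struct bookmark {
	char path[256];
	enum addr_kind kind;
	long num;         /* page (after !) or line (after :) */
	char regex[128];  /* pattern between the slashes of :/.../ */
};

/* Split a bookmark at its first '!' or ':'. Returns 0 on success and
   -1 on malformed or over-long input. */
int parse_bookmark(const char *s, struct bookmark *b)
{
	const char *sep = strpbrk(s, "!:");
	size_t n = sep != NULL ? (size_t)(sep - s) : strlen(s);

	memset(b, 0, sizeof *b);       /* also NUL-terminates path/regex */
	if (n >= sizeof b->path)
		return -1;
	memcpy(b->path, s, n);
	if (sep == NULL)
		return 0;                  /* plain file, no address */

	if (*sep == '!' || isdigit((unsigned char)sep[1])) {
		char *end;

		b->num = strtol(sep + 1, &end, 10);
		if (end == sep + 1 || *end != '\0')
			return -1;
		b->kind = *sep == '!' ? ADDR_PAGE : ADDR_LINE;
		return 0;
	}
	if (sep[1] == '/') {
		const char *close = strrchr(sep + 2, '/');
		size_t m;

		if (close == NULL || close[1] != '\0')
			return -1;
		m = (size_t)(close - (sep + 2));
		if (m >= sizeof b->regex)
			return -1;
		memcpy(b->regex, sep + 2, m);
		b->kind = ADDR_REGEX;
		return 0;
	}
	return -1;
}
```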

Speaking of which, let's look at one more example of how we can modify plumbing rules to suit our workflow. In the PIM section below, I mention a script called que, which iterates over a list (a queue) by printing the next line in the file each time you run que on it. Let's assume we have a list called $home/lib/que/peterpan with the following content:

/usr/glenda/doc/books/peter_pan.txt:/Chapter 1/

/usr/glenda/doc/books/peter_pan.txt:/Chapter 2/

/usr/glenda/doc/books/peter_pan.txt:/Chapter 3/

...

Now, each time we run que $home/lib/que/peterpan, it will tell us what chapter to read next in our book. And sure enough, we can right click this output in acme to open up the book in the right place (since "Chapter x" contains whitespace we need to right click and drag to mark the whole line). But that is waaay too much work for a lazy pants such as myself! What I really want is just to add something like this to my bookmark database:

/usr/glenda/lib/que/peterpan:que

Right click this in acme, and have it automatically call que and open up the right chapter for me. As it turns out, such automation is easy-peasy, I just need to add this plumbing rule to my $home/lib/plumbing (and update my rule set with the command: cp $home/lib/plumbing /mnt/plumb/rules):

# plumb the next item in a queue file

type is text

data matches '([a-zA-Z0-9_\-./]+)(:que)'

arg isfile $1

plumb to none

plumb start rc -c 'plumb `{que '$file'}'

This rule checks whether the plumber received "something_something:que", and whether the first subexpression, $1 (the part before :que), is a real file. We are not interested in opening this file, so we plumb it to "none", and then run our shell command plumb `{que $file}. Of course, our queue doesn't need to consist of plain-text chapters: it could be PDFs with page numbers, sequential audio files in a podcast, or what have you.
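The $1 in that rule is the first parenthesized subexpression of the pattern. The following portable C sketch shows the same capture using POSIX regexec(3) match offsets. It stands in for the plumber's own regexp library, and the whole-message anchoring is an assumption. POSIX bracket expressions have no \- escape, so the hyphen is placed last. The function name que_path is hypothetical.

```c
#include <regex.h>
#include <string.h>

/* Copy the path ($1) out of "path:que" into path (n bytes).
   Returns 0 on a match, -1 on no match or if path is too small. */
int que_path(const char *text, char *path, size_t n)
{
	regex_t re;
	regmatch_t m[3];     /* whole match, $1 (path), $2 (":que") */
	size_t len;
	int rc;

	if (regcomp(&re, "^([a-zA-Z0-9_./-]+)(:que)$", REG_EXTENDED) != 0)
		return -1;
	rc = regexec(&re, text, 3, m, 0);
	regfree(&re);
	if (rc != 0)
		return -1;
	len = (size_t)(m[1].rm_eo - m[1].rm_so);
	if (len >= n)
		return -1;
	memcpy(path, text + m[1].rm_so, len);
	path[len] = '\0';
	return 0;
}
```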

We can abuse the plumber in all kinds of fun and potentially destructive ways. It basically allows you to define any text pattern and connect that to any command. Even if you don't go bananas with this, it is an eye opening experience to read /sys/lib/plumb/basic and realize just how simple inter-process messaging can be!

To shut down or reboot a Plan 9 system, use the fshalt and reboot commands. If you are using a remote Plan 9 desktop, such as drawterm, it is safe to just kill the application directly. The remote desktop is stateless, so shutting it down will in no way affect the host file system. In fact, the system is designed to run a CPU server 24/7, connected to diskless clients where the users do their actual work. Probably because of this design, Plan 9 file systems do not traditionally try to recover from a power loss, so don't pull the plug on your file server!

For CPU servers, using tried and true file systems is not a bad idea. For laptops with a high risk of power loss, though, it might be wise to use 9front's new GEFS (A Good Enough File System) file system. It handles crash recovery decently, with self-healing and other nifty features in the offing. (Of course, this is still not a substitute for backups.)

PS: fshalt does not work right in qemu if you use classic Plan 9, such as 9legacy. In such cases you should write your own shutdown script, like so (note: this is not an issue in 9front):

#!/bin/rc

# halt - shutdown file server

# usage: halt

echo fsys main sync >>/srv/fscons

sleep 5

echo 'It''s now safe to turn off your computer'

echo fsys main halt >>/srv/fscons

To monitor your remaining battery, memory usage, Ethernet traffic, system load and other resources, you can use the stats and memory commands. Simply cat'ing around in /dev will also provide plenty of system information; for instance, cat /dev/kmesg is essentially equivalent to dmesg on UNIX. There is also limited support for suspend and hibernate if you add the apm0= value to plan9.ini (see section 9.2.3 in the fqa and apm(8)). Don't expect this to work, though: ACPI and APM are a hairy business!

PS: memory is just a simple shell script in 9front that cats /dev/swap and reformats the values into a more human-readable form. Classic Plan 9 systems do not have this script.

Speaking of not working, in my experience battery monitoring usually doesn't. To check whether it works on your box, just run stats, right-click and add battery. And unless you are very lucky, plugging in a headset will not automatically redirect audio output either. I had both problems on my cheap Acer laptop (note to self: only buy ThinkPads from now on). The latter issue will be revisited in the audio section below. As for battery monitoring, a very simple workaround is to run sleep 7200; echo Warning: batteries about to go out! > '#c/cons'. Suppose your battery lasts 2 hours and 15 minutes, and you run this command when you know the machine is fully charged. The 2-hour (7200-second) sleep then ends, and you are notified, 15 minutes before your battery runs out.

The main problem with this elegant solution is that it does not work at all if you expect to reboot your computer at some unknown point in the future. I find that this is frequently the case when I am traveling, which is exactly when I need battery monitoring the most. So I need a way to start a 2-hour countdown that persists across reboots, and this script does the trick:

#!/bin/rc

# batt - print estimated remaining battery power

# usage: [battery=min] batt [-]

#

# bug: the script doesn't actually know anything about your battery,

# the user is required to run batt - initially to set a timer.

# set some defaults

rfork e

if(~ $battery "") battery=120 # hardware dependent

capa=$battery

batt=$home/lib/battery

stat='Battery at %p%% estimated remaining time: %r min'

mesg='Your battery is about to run out!'

ping=$home/media/music/samples/mario.mp3

# parse arguments

switch($#*){

case 0

if(! test -f $batt){

echo 'batt: countdown hasn''t started, run batt - first!' >[1=2]

exit notstarted

}

used = `{cat $batt}

pros = `{echo 100 - ($used^00 / $capa) | hoc | sed 's/\..*//'}

remn = `{echo $capa - $used | hoc}

echo $stat | sed -e 's/%p/'$pros'/' -e 's/%r/'$remn'/' -e 's/%%/%/'

case 1

# -, start or continue a countdown

if(! test -f $batt) echo 0 > $batt

while (sleep 60) {

date > $home/lib/end

used = `{echo `{cat $batt} + 1 | hoc}

if (test $used -ge $capa) {

echo $mesg >'#c/cons'

if(test -f $ping) play $ping >[2]/dev/null

rm -f $batt

exit

}

echo $used > $batt

}&

case *

echo 'Usage: [battery=min] batt [-]' >[1=2]

exit usage

}

You'll note that this simple countdown script measures time in minutes (120, not 7200). The main reason for this crude measurement of time is, again, the battery: if we counted every second, the script would wake up 60 times as often and be correspondingly harder on the battery. Anyway, with this script you can start a countdown when you know the battery is fully charged, using the command batt - (or battery=80 batt -, or whatever, to set a countdown other than the default 120 minutes). Once that daemon has started, run batt to get an estimate of the remaining juice. But here comes the clever part: after a reboot, run batt - to continue the battery countdown! In fact, you can fully automate this step by adding something like this to your $home/lib/profile:

battery=80 # default battery capacity

if (test -d /mnt/term/dev){

# do drawterm stuff

...

}

if not {

# do non-drawterm stuff

if(test -f $home/lib/battery) batt -

...

}

Don't let the boilerplate here scare you. If you don't use drawterm, just add if(test -f $home/lib/battery) batt -, and you're done. You probably don't want to mess with battery stuff when you are using drawterm anyway, for obvious reasons. This command simply checks whether the file that the batt daemon uses to track the countdown exists. Our batt script removes this file once the countdown has expired, so its presence means that an unfinished countdown was in progress before the last reboot, and the daemon is respawned. This is also a convenient place to set your default battery capacity. Of course, you could just edit the batt script, but if you use it on multiple laptops, setting the value in $home/lib/profile might be more practical.

Finally, to know when the laptop is done recharging from a depleted battery, just measure the time it takes in Ubuntu, or other suitable grandma distro, and set an appropriate timer in Plan 9. We could also wrap this up in a simple script that interrupts a battery countdown and cleans up the temp file:

#!/bin/rc

# recharge - estimate when battery is recharged

# usage: recharge

slay batt | rc

rm -f $home/lib/battery

sleep 1800

echo 'Battery is fully charged!' > '#c/cons'

Our script is quite unintelligent, but in my opinion it is a nice example of how you can create fairly useful and simple workarounds on UNIX-like operating systems, even when they lack vital features. (It's probably a good idea to use the crash-resistant GEFS file system on laptops too, in case our makeshift battery script doesn't provide enough warning.)

Plan 9 has no /etc directory like UNIX. Instead, it is configured through a small handful of files, the most important of which is probably $home/lib/profile, the user startup file. This is where you customize your user-specific settings. It is somewhat analogous to ~/.profile on UNIX, but more important, since the desktop and the shell are much more integrated in Plan 9. Personally, I like to add the line . $home/lib/aliases to my lib/profile. This lets me keep custom aliases in that separate file, while lib/profile holds only system-related configuration. But that is just a matter of taste.

Beware that the settings in $home/lib/profile need to cater to very different situations! You may be booting a CPU server, a standalone "terminal" or a diskless one, or logging in through a remote connection (rcpu or drawterm, for example). All of these read lib/profile, but they often need different customizations. The moral is: be careful when editing your profile, hubris causes debris.

The kernel configuration lives in the plan9.ini file, which resides in a special boot partition. To read the contents of this partition you must first run 9fs 9fat (on classic Plan 9, run 9fat:). You can then read the file as /n/9fat/plan9.ini. Note that, like any mount in Plan 9, this only changes the namespace of your current process, so other processes will not see the file. It is by editing this file that you configure your system to run as a CPU server or a terminal, and you may also need to tweak some hardware-specific values here. See plan9.ini(8) and section 3 of the fqa.

Network configuration is handled in /lib/ndb/local, with additional related files in that directory. But you don't need to mess around with this file if you just want to quickly connect to the internet on a laptop (see section 6 in the fqa). Mail configuration is handled by a number of files in /mail/lib (see section 7.7 in the fqa).

Lastly there is also a desktop specific startup file in $home/bin/rc/riostart, which is useful for specifying what programs and windows to auto launch, it is discussed in the tiling windows section of this article.

The rio window manager is painstakingly crafted with love and care to look as boring as humanly possible. This is important - a distraction free environment is a productive environment. But it is possible to install 3rd party patches that let you customize the rio theme and set a wallpaper, if you really crave such frippery (this will not work for classic Plan 9 however):

# install rio-themes

; bind -ac /dist/plan9front /

; cd /sys/src/cmd/rio

; hget https://ftrv.se/_/9/patches/rio-themes.patch | patch -p5

; mk install

; reboot # or otherwise restart rio

# write a theme, eg. in $home/lib/theme/rio.theme

# ps: wallpaper must be in the plan 9 image format,

# eg. jpg -9t <pic_1920x1080.jpg >$home/lib/1920x1080.img

rioback /usr/glenda/lib/1920x1080.img

back f1f1f1

high cccccc

border 999999

text 000000

htext 000000

title 000000

ltitle bcbcbc

hold 000099

lhold 005dbb

palehold 4993dd

paletext 6f6f6f

size 000000

menubar 448844

menuback eaffea

menuhigh 448844

menubord 88cc88

menutext 000000

menuhtext eaffea

# use your theme (add it to riostart if you want)

; window 'cat $home/lib/theme/rio.theme > /mnt/wsys/theme;

sleep 0.5;

grep softscreen /dev/vgactl >> /dev/vgactl;

echo hwblank off >> /dev/vgactl'

PS: Although the rio window manager works great, it is getting a little long in the tooth. There have been several attempts at extending or rewriting it over the years. The most promising variant in my opinion is lola. Please do test it if you're curious, it's a new project, and the developer would appreciate your feedback.

For better or worse, computing is an English affair. I'm sorry, but if you want to program and use any operating system in any professional capacity, you need to learn the English language. Nearly all vital documentation, and any defining works in programming, computer science and computing history, will be written in this language. I don't mean to be unsympathetic here, I am not a native English speaker myself, so I know that this can be a tall order, but that's just the way it is.

That said, technically speaking, Plan 9 does have very good internationalization support. Of course, all of the instructions given during installation, and all of the available documentation, are in English. But the system itself supports most languages, as everything is Unicode throughout.* So as long as you have the necessary fonts installed, you can read and write any language (well, languages that aren't written from left to right will require some work). UTF-8 was in fact invented by the Plan 9 developers! For example, to write the Northern Norwegian sentence "Æ e i Å æ å" (yes, this is a real sentence*), type Alt+Shift+a+e e i Alt+o+Shift+a Alt+a+e Alt+o+a. A list of the international characters available with the Alt key combo can be found in /lib/keyboard. So to find out how to write a smiley face in Plan 9, just type grep ☺ /lib/keyboard (naturally the ☺ can be copy-pasted), and it will print:

263A :) ☺ smiley face

That is, type Alt+:+) to produce the Unicode character 0x263A, aka a smiley face. You can change the default US QWERTY layout with the kbmap command: right-click the layout you want, then type q to quit. To make this change permanent:

# change dvorak to whatever layout you prefer

# setting layout in 9front:

; 9fs 9fat

; echo 'kbmap=dvorak' >> /n/9fat/plan9.ini

# setting layout in classic Plan 9:

; sam $home/lib/profile

# add the following line somewhere near the top

; cp /sys/lib/kbmap/dvorak /dev/kbmap
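Each /lib/keyboard line pairs a hexadecimal code point with the characters you type after Alt, as in the 263A :) ☺ smiley face entry shown earlier. As a worked illustration of how such a code point becomes UTF-8 bytes, here is a portable C sketch. It parses a line of that shape and encodes the code point by hand. The field layout is inferred from that single example line (entries without an Alt sequence would need extra handling), and the function names are hypothetical.

```c
#include <stdio.h>

/* Parse the code point (hex) and Alt sequence from a line such as
   "263A :) ☺ smiley face". seq needs room for 16 bytes.
   Returns 0 on success, -1 if either field is missing. */
int parse_keyboard_line(const char *line, unsigned long *code, char seq[16])
{
	return sscanf(line, "%lx %15s", code, seq) == 2 ? 0 : -1;
}

/* Encode code point c (at most 0x10FFFF) as UTF-8 into out and
   return the number of bytes written (1 to 4). */
int utf8_encode(unsigned long c, unsigned char out[4])
{
	if (c < 0x80) {
		out[0] = (unsigned char)c;
		return 1;
	}
	if (c < 0x800) {
		out[0] = (unsigned char)(0xC0 | (c >> 6));
		out[1] = (unsigned char)(0x80 | (c & 0x3F));
		return 2;
	}
	if (c < 0x10000) {
		out[0] = (unsigned char)(0xE0 | (c >> 12));
		out[1] = (unsigned char)(0x80 | ((c >> 6) & 0x3F));
		out[2] = (unsigned char)(0x80 | (c & 0x3F));
		return 3;
	}
	out[0] = (unsigned char)(0xF0 | (c >> 18));
	out[1] = (unsigned char)(0x80 | ((c >> 12) & 0x3F));
	out[2] = (unsigned char)(0x80 | ((c >> 6) & 0x3F));
	out[3] = (unsigned char)(0x80 | (c & 0x3F));
	return 4;
}
```

For the smiley entry, the parser yields 0x263A and ":)", and the encoder produces the three bytes E2 98 BA. Æ (0xC6) takes two bytes, C3 86.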

Modifying an existing keyboard layout is fairly easy: just copy the layout in /sys/lib/kbmap and open it in a text editor. The fields are "layer" (normally 0 for a direct key press, 1 for Shift+Key or 4 for Ctrl+Key), keycode and character (e.g. 'M for the letter M, ^M for Carriage Return). Writing a layout from scratch is more work, but doable (see kbdfs(8) for more info).
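Going by the field description above, a kbmap line can be modelled as three whitespace-separated fields. The following portable C sketch is a hypothetical parser, not kbdfs's own code. It handles the 'M and ^M character forms described above. As an assumption, it also accepts plain numbers (decimal, or hex with a 0x prefix). The 'c form is ASCII-only here, and kbdfs(8) remains the authoritative description of the format.

```c
#include <stdlib.h>

struct kbent {
	int layer;           /* 0 plain, 1 Shift, 4 Ctrl, ... */
	int keycode;
	unsigned long rune;  /* resulting character */
};

/* Parse "layer keycode char". Returns 0 on success, -1 otherwise. */
int parse_kbmap_line(const char *s, struct kbent *e)
{
	char *end;

	e->layer = (int)strtol(s, &end, 0);   /* strtol skips blanks */
	if (end == s)
		return -1;
	s = end;
	e->keycode = (int)strtol(s, &end, 0);
	if (end == s)
		return -1;
	s = end;
	while (*s == ' ' || *s == '\t')
		s++;
	if (s[0] == '\'' && s[1] != '\0') {   /* 'M: the literal character */
		e->rune = (unsigned char)s[1];
		return 0;
	}
	if (s[0] == '^' && s[1] >= '@' && s[1] <= '_') {
		e->rune = (unsigned long)(s[1] - '@');   /* ^M = 77 - 64 = 13 */
		return 0;
	}
	e->rune = strtoul(s, &end, 0);        /* assumed numeric form */
	return end == s ? -1 : 0;
}
```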

As touched upon in the previous section, you can choose a non-Latin keyboard layout, such as ru (Russian) or fa (Farsi, i.e. Persian). Sometimes, though, you may need to work with a language other than your input language of choice. For example, I use English as my day-to-day system language, but every so often I need to work with some Russian or Persian text (my wife is Russian/Ukrainian, and I've dabbled a bit with Persian as a hobby). I guess I could learn to touch-type the Russian keyboard layout, but for now it's too much of a hassle. I could type Russian words using Alt key combos, e.g. Alt+@P Alt+@r Alt+@i Alt+@v Alt+@e Alt+@t would spell Привет.* But that is a lot of typing! We could make a more convenient tool with sed:

#!/bin/rc

# cyr2utf - convert ASCII Cyrillic representations to UTF-8

# usage: cyr2utf < in > out

# bugs: uses arbitrary non-standard conventions

# use lat2utf if you need to *mix* Latin and Cyrillic!

#

# guide:

# Most of the Cyrillic letters are mapped to their vocalization

# equivalents in the Latin alphabet, eg: A, B, V, G, D, E, Z, K, L, M,

# N, P, R, C/S, T, and F map perfectly to А, Б, В, Г, Д, Е, З, К, Л, М,

# Н, П, Р, С, Т, and Ф. I, J, O, U, H and Y are fairly close to И, Й,

# О, У , Х and Ы. A few letters are mapped to Cyrillic letters that

# look like the Latin letters, such as X, Q and W correspond to Ж, Ц

# and Ш.

#

# Finally, some Cyrillic letters must be written by an escape '\'

# character followed by one or two Latin letters, such as \E, \:E/\JO,

# \Q, \W, \JU, \JA, \h/\hard and \s/\soft corresponding to: Э, Ё, Ч, Щ,

# Ю, Я, ъ and ь.

sed -e 's/\\E/Э/g' \

-e 's/\\e/э/g' \

-e 's/\\:E/Ё/g' \

-e 's/\\:e/ё/g' \

-e 's/\\JO/Ё/g' \

-e 's/\\Jo/Ё/g' \

-e 's/\\jo/ё/g' \

-e 's/\\Q/Ч/g' \

-e 's/\\q/ч/g' \

-e 's/\\W/Щ/g' \

-e 's/\\w/щ/g' \

-e 's/\\JU/Ю/g' \

-e 's/\\Ju/Ю/g' \

-e 's/\\ju/ю/g' \

-e 's/\\JA/Я/g' \

-e 's/\\Ja/Я/g' \

-e 's/\\ja/я/g' \

-e 's/\\hard/ъ/g' \

-e 's/\\h/ъ/g' \

-e 's/\\soft/ь/g' \

-e 's/\\s/ь/g' \

-e 's/A/А/g' \

-e 's/a/а/g' \

-e 's/B/Б/g' \

-e 's/b/б/g' \

-e 's/V/В/g' \

-e 's/v/в/g' \

-e 's/G/Г/g' \

-e 's/g/г/g' \

-e 's/D/Д/g' \

-e 's/d/д/g' \

-e 's/E/Е/g' \

-e 's/e/е/g' \

-e 's/Z/З/g' \

-e 's/z/з/g' \

-e 's/K/К/g' \

-e 's/k/к/g' \

-e 's/L/Л/g' \

-e 's/l/л/g' \

-e 's/M/М/g' \

-e 's/m/м/g' \

-e 's/N/Н/g' \

-e 's/n/н/g' \

-e 's/P/П/g' \

-e 's/p/п/g' \

-e 's/R/Р/g' \

-e 's/r/р/g' \

-e 's/S/С/g' \

-e 's/s/с/g' \

-e 's/C/С/g' \

-e 's/c/с/g' \

-e 's/T/Т/g' \

-e 's/t/т/g' \

-e 's/F/Ф/g' \

-e 's/f/ф/g' \

-e 's/I/И/g' \

-e 's/i/и/g' \

-e 's/J/Й/g' \

-e 's/j/й/g' \

-e 's/O/О/g' \

-e 's/o/о/g' \

-e 's/U/У/g' \

-e 's/u/у/g' \

-e 's/H/Х/g' \

-e 's/h/х/g' \

-e 's/Y/Ы/g' \

-e 's/y/ы/g' \

-e 's/X/Ж/g' \

-e 's/x/ж/g' \

-e 's/Q/Ц/g' \

-e 's/q/ц/g' \

-e 's/W/Ш/g' \

-e 's/w/ш/g'

With such a tool in place, we can write a Russian greeting with echo Privet | cyr2utf. Of course, languages are complicated. A person who only knows a single language may naively believe that two dictionaries, one in each language, and a word-for-word lookup are all that is needed for translation. That is not the case: two languages never overlap perfectly. Neither do alphabets. So our cyr2utf tool is not a magic bullet. You need a passing familiarity with the Cyrillic alphabet, and you must learn some idiosyncrasies of the script to boot. But for someone who needs to type Russian text occasionally, the script can be a timesaver.
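The order of the sed expressions in cyr2utf matters. Multi-letter escapes such as \hard must be replaced before \h, and all escapes before the single letters; otherwise an earlier expression would rewrite letters that a later escape still needs. A different technique avoids the ordering problem: scan the input once and, at each position, take the longest matching table entry. The portable C sketch below shows that approach. It is not a port of the script: the table holds only a hypothetical handful of entries, and translit is an illustrative name.

```c
#include <string.h>

/* A small excerpt of cyr2utf-style mappings. */
static const struct {
	const char *latin;
	const char *cyr;
} table[] = {
	{ "\\hard", "ъ" }, { "\\h", "ъ" }, { "\\ja", "я" }, { "\\JA", "Я" },
	{ "P", "П" }, { "r", "р" }, { "i", "и" }, { "v", "в" },
	{ "e", "е" }, { "t", "т" }, { "a", "а" }, { "d", "д" },
};

/* Transliterate src into dst (n bytes). At each position the longest
   matching entry wins, so "\hard" beats "\h" whatever the table order;
   bytes with no entry are copied unchanged.
   Returns 0 on success, -1 if dst is too small. */
int translit(const char *src, char *dst, size_t n)
{
	size_t used = 0;

	if (n == 0)
		return -1;
	while (*src != '\0') {
		const char *out = NULL;
		size_t best = 0, len, i;
		char one[2];

		for (i = 0; i < sizeof table / sizeof table[0]; i++) {
			len = strlen(table[i].latin);
			if (len > best && strncmp(src, table[i].latin, len) == 0) {
				best = len;
				out = table[i].cyr;
			}
		}
		if (out == NULL) {        /* no mapping: copy one byte */
			one[0] = *src;
			one[1] = '\0';
			out = one;
			best = 1;
		}
		len = strlen(out);
		if (used + len >= n)      /* keep room for the final NUL */
			return -1;
		memcpy(dst + used, out, len);
		used += len;
		src += best;
	}
	dst[used] = '\0';
	return 0;
}
```

With this table, "Privet" becomes "Привет" and "d\ha" becomes "дъа".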

For Persian, the above comments are also true, only more so. In addition to having a weird alphabet (from a Westerner's perspective), it is written from right to left. Plan 9 does not have fribidi, but we can write a poor man's equivalent in awk. With that in place, we can also write a sed translator:

#!/bin/rc

# bidi - print bidirectional text

# usage: bidi [files...]

# bugs: tabs are statically changed to four spaces

# text alignment requires a fixed width font

# set some defaults

rfork e

if (~ $#* 0) files=/fd/0

if not files=($*)

# print bidirectional text

sed 's/	/    /g' $files | awk '

BEGIN { FS = "" }

{ row[NR] = $0

if (length() > max) max = length()

} END {

for(r=1;r<=NR;r++){

n = split(row[r], l)

line = ""

for(i=n;i>0;i--) line = line l[i]

printf("%" max "s\n", line)

}

}'

#!/bin/rc

# far2utf - convert ASCII Persian representations to UTF-8

# usage: far2utf < in > out

# bugs: basic implementation, non-standard conventions

# annoyingly, an initial alef must be typed \i, not i

# depend: fribidi

#

# guide:

# Most of the Persian letters are mapped to their vocalization

# equivalents in the Latin alphabet, eg: b, p, t, s, j, c, x, d, r, z,

# f, q, k, g, l, m, n, v/w, y map more or less to ب, پ, ت, س, ج, چ, خ,

# د, ر, ز, ف, ق, ک, گ, ل, م, ن, و and ی. While some letters must be

# escaped, such as \h, \j, \sh, \se/\th, \sd, \ta, \zl, \zd, \za, \e

# and \q for ح, ژ, ش, ث, ص, ط, ذ, ض, ظ, ع and غ.

#

# The alef ا, hamza ء, tasdid ّ and sukun ْ are written as i, \g, \w

# and \o, since they look similar to these Latin letters. An initial

# alef (آ) is written \i. The three vowel indicators are a, e, o for

# َ, ِ, ُ. Letters with a hamza should be written \xg, eg. \ig for أ.

# Standalone madda, "flying" hamza and under hamza (\m, \fg, \ug) do

# not have a practical use. The tavine nasb (ً), jarr and raf signs

# are \tn/\n, \tj and \tr. Kasida is \-. The rare characters ة, إ and

# ڤ are written \h:, \iu (or i\ug) and \b.

#

# Note that و must be written as v or w, since o is used for ُ, and

# that ی must be written y, not i (ا) or j (ج). Unfortunately,

# "alternative" s/z/t letters must be written as three digits (\xx).

sed -e 's/\\ig/أ/g' \

-e 's/\\vg/ؤ/g' \

-e 's/\\wg/ؤ/g' \

-e 's/\\yg/ئ/g' \

-e 's/\\hg/ۀ/g' \

-e 's/\\h:/ة/g' \

-e 's/\\fg/ٔ/g' \

-e 's/\\ug/ٕ/g' \

-e 's/\\iu/إ/g' \

-e 's/\\g/ء/g' \

-e 's/\\i/آ/g' \

-e 's/\\m/ۤ/g' \

-e 's/\\n/ً/g' \

-e 's/\\tn/ً/g' \

-e 's/\\tj/ٍ/g' \

-e 's/\\tr/ٌ/g' \

-e 's/\\w/ّ/g' \

-e 's/\\o/ْ/g' \

-e 's/\\-/ـ/g' \

-e 's/\\b/ڤ/g' \

-e 's/\\h/ح/g' \

-e 's/\\j/ژ/g' \

-e 's/\\e/ع/g' \

-e 's/\\q/غ/g' \

-e 's/\\se/ث/g' \

-e 's/\\th/ث/g' \

-e 's/\\sh/ش/g' \

-e 's/\\sd/ص/g' \

-e 's/\\ta/ط/g' \

-e 's/\\zl/ذ/g' \

-e 's/\\zd/ض/g' \

-e 's/\\za/ظ/g' \

-e 's/i/ا/g' \

-e 's/a/َ/g' \

-e 's/e/ِ/g' \

-e 's/o/ُ/g' \

-e 's/b/ب/g' \

-e 's/p/پ/g' \

-e 's/t/ت/g' \

-e 's/s/س/g' \

-e 's/j/ج/g' \

-e 's/c/چ/g' \

-e 's/x/خ/g' \

-e 's/d/د/g' \

-e 's/r/ر/g' \

-e 's/z/ز/g' \

-e 's/f/ف/g' \

-e 's/q/ق/g' \

-e 's/k/ک/g' \

-e 's/g/گ/g' \

-e 's/l/ل/g' \

-e 's/m/م/g' \

-e 's/n/ن/g' \

-e 's/v/و/g' \

-e 's/w/و/g' \

-e 's/h/ه/g' \

-e 's/y/ی/g' \

-e 's/1/۱/g' \

-e 's/2/۲/g' \

-e 's/3/۳/g' \

-e 's/4/۴/g' \

-e 's/5/۵/g' \

-e 's/6/۶/g' \

-e 's/7/۷/g' \

-e 's/8/۸/g' \

-e 's/9/۹/g' \

-e 's/0/۰/g'

We can now type echo slim | far2utf | bidi, and it will produce مالس.* Unfortunately, our simple bidi code does not work perfectly: it does not reshape the Persian letters so that they join together. (It would be like reading "H E L L O" instead of "Hello": intelligible, but ugly.) To do that, we would have to write a more sophisticated bidi implementation in C. Of course, you could also convert the text with fribidi if you have a UNIX box handy. Once converted correctly, the text will display just fine in Plan 9.
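The core of bidi is reversing each line character by character rather than byte by byte, since a Persian letter takes two bytes in UTF-8. The portable C sketch below does only that. Like the awk version, it does no letter shaping. Unlike the awk version, it also does no right-alignment. It assumes valid UTF-8 input, and the function names are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Byte length of the UTF-8 sequence that starts with lead byte b.
   Stray continuation bytes are treated as length 1. */
static size_t utf8_len(unsigned char b)
{
	if (b >= 0xF0)
		return 4;
	if (b >= 0xE0)
		return 3;
	if (b >= 0xC0)
		return 2;
	return 1;
}

/* Write the characters of src into dst in reverse order, keeping each
   multi-byte sequence intact. dst needs strlen(src) + 1 bytes. */
void reverse_runes(const char *src, char *dst)
{
	size_t n = strlen(src), i = 0;

	dst[n] = '\0';
	while (i < n) {
		size_t len = utf8_len((unsigned char)src[i]);

		if (len > n - i)          /* truncated sequence at the end */
			len = n - i;
		memcpy(dst + n - i - len, src + i, len);
		i += len;
	}
}
```

Given سلام (the output of echo slim | far2utf), reverse_runes produces مالس, the same unshaped result as the awk script.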

Now, playing with non-Latin alphabets in the terminal is one thing, but if we plan to work seriously with them, we need to add such text to documents. For Russian that's easy peasy: just write it directly in troff (more on that in the writing documents section below). For Persian, though, it's trickier. Plan 9's version of troff does not support right-to-left input, nor does it include Persian fonts. The easiest workaround is, again, to have a UNIX box at the ready. You can install neatroff and read its short getting started document. Of course, you can still write the ms files in Plan 9 and display the PDFs that neatroff generates.

Now I'm sure Russian, Persian, not to mention Norwegian, documents are totally irrelevant for you, but that's not the point. These are merely examples. The point is that you can work with Non-English languages in Plan 9. And more broadly, even when Plan 9 does have limitations, you can usually work around them with an alternative OS at the ready.

The method of adding a new user to Plan 9 varies depending on what file system you use. To illustrate, we can add a new user called bob, that is a member of the email (upas) and admin groups (adm for user administration, sys for access to system files), to various Plan 9 filesystems:

# add user to a gefs file server

; mount /srv/gefs /adm adm

; sam /adm/users # edit by hand

; echo users >> /srv/gefs.cmd # refresh user db

; mount /srv/gefs /n/u %main # create user dir

; mkdir /n/u/usr/bob

; chgrp -u bob /n/u/usr/bob

; chgrp bob /n/u/usr/bob

# optionally, set tmp so it doesn't take automatic snapshots

; echo snap -m main other >> /srv/gefs.cmd

; echo snap other retain '' >> /srv/gefs.cmd

; mount /srv/gefs /n/o %other

; mkdir -p /n/o/usr/bob/tmp

; chgrp bob /n/o/usr/bob^('' /tmp)

; chmod 700 /n/o/usr/bob^('' /tmp)

# add user to a hjfs/cwfs file server

# (replace hjfs.cmd with cwfs.cmd for cwfs server)

; echo newuser bob >> /srv/hjfs.cmd

; echo newuser upas +bob >> /srv/hjfs.cmd

; echo newuser adm +bob >> /srv/hjfs.cmd

; echo newuser sys +bob >> /srv/hjfs.cmd

# add user to the auth server (on any filesystem)

; auth/keyfs

; auth/changeuser bob

; auth/enable bob

The new GEFS file system can be a little confusing if you are used to the old way of doing things in 9front. The Version Control section below might help explain what's going on (and naturally, read the gefs(8) manpage). If you are using a classic Plan 9 system, use fscons instead of hjfs.cmd/cwfs.cmd, and the command uname rather than newuser; otherwise it's the same as for hjfs/cwfs. The first thing Bob needs to do when he logs in to the Plan 9 box is to type /sys/lib/newuser. This will create an initial home directory with basic files such as lib/profile and a tmp directory. Why doesn't the system do this by default? Consider it a security feature: users who aren't able to type /sys/lib/newuser have only limited access to the system, which protects the other users. By the way, you may wish to add the new user to secstore as well (see section 7.4.3.1 in the fqa).

Security in Plan 9 is built around an astute observation: while it is the operating system's responsibility to secure the digital world (i.e. the network), it is your responsibility, as a physical being, to provide physical security. If, like me, you are a scrawny nerd, you may find that statement disconcerting. Relax, don't get buffed, get smart. For example, suppose a Plan 9 network of multiple diskless terminals is serviced by a single file server that isn't also a CPU server. Then the only practical way to compromise file security on that network is to gain physical access to the file server machine. The sysadmin can lock this machine behind a server room door, behind a death-ray enhanced mutant shark pool, or whatever physical restraints his evil boss may fancy.

The user who boots a machine has physical access to it. This hostowner owns all the resources of that machine, but how much power that gives him on the network depends entirely on how the network is configured. A Plan 9 machine that isn't a CPU server cannot be logged into remotely, a machine that isn't a file server cannot export its files, and a machine that isn't an auth server cannot authenticate remote transactions. In practice, though, a 9front user will typically set up his laptop as a self-contained CPU+AUTH+File server. In that case the hostowner is nearly as powerful as the almighty root on UNIX (although he must still show ostensible respect for file permissions). Single-user "terminals", on the other hand, were originally diskless and do not export any resources whatsoever. Thus they have nothing to secure, and Plan 9 will let anyone log in to such a machine without a password. This is not ideal today, when a default Plan 9 installation provides a "terminal" with local disk storage. There are a few ways to work around this issue: 1) configure the system to run as a CPU+AUTH server, which does require a password to log in; 2) configure the BIOS to require a boot password; 3) use 9front's support for encrypting the hard disk, which requires a passphrase to log in (see section 4.4 in the fqa).

To demonstrate some multiuser shenanigans:

# UNIX friendly aliases

fn su{

rcpu -u $*

}

fn chown{

chgrp -u $*

}

; su bob # switch user on CPU server

...

ERROR ERROR ERROR # Oops, Bob's profile is misconfigured

...

# for CWFS just change hjfs.cmd to cwfs.cmd

; echo allow >> /srv/hjfs.cmd # fs hostowner: allow chown

; chown glenda /usr/bob/lib/profile

; B /usr/bob/lib/profile # fix the problem

; chown bob /usr/bob/lib/profile

; su bob

# GEFS works a little differently

; mount /srv/gefs /n/u %main # permissive mount point

; chown glenda /n/u/usr/bob/lib/profile

...

There is no df command in Plan 9 for measuring disk usage, but you can get that information in other ways. On an hjfs file system, run this command: echo df >> /srv/hjfs.cmd. On cwfs and gefs the method is a bit awkward: you must first write a command to the file server and then read from the control file. For example, echo statw >> /srv/cwfs.cmd && cat /srv/cwfs.cmd will give you a bunch of statistics for cwfs, which currently uses 16 KB file system blocks (hit Del when you are done). What you probably want is the last number on the wmax line, which tells you what percentage of the disk you are using. The cache line is also important: the cache is only 1/5 the size of the main storage area, but if it runs out of space, you will run into problems! gefs works similarly, but gives you fewer statistics. Here is a crude df script for 9front that you may find useful:

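```rc
#!/bin/rc
# df - print disk usage
# usage: df

# hjfs
if(test -f /srv/hjfs.cmd) echo df >> /srv/hjfs.cmd

# gefs and cwfs: send the command, then read back a fixed number of
# blocks with dd (cat would keep waiting until you hit Del)
if(test -f /srv/gefs.cmd){
	echo df >> /srv/gefs.cmd
	dd -if /srv/gefs.cmd -bs 1024 -count 12 -quiet 1
}

if(test -f /srv/cwfs.cmd){
	echo statw >> /srv/cwfs.cmd
	# keep only what follows the last + on the wmax line (percent used)
	dd -if /srv/cwfs.cmd -bs 1024 -count 21 -quiet 1 |
		grep wmax | sed 's/.*\+//'
}
```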

I think the method is similar in classic Plan 9, but I am not exactly sure how to do it there (feel free to drop me a line if you know how, or if you spot any other deficiencies in my article, for that matter). For individual files and folders, you can of course use the trusty old du command to measure their size. Here is a simple and handy script that lists the files and folders in your current directory, sorted by disk usage:

```rc
#!/bin/rc
# dus - disk usage summary for current dir
# usage: dus

# du -s reports sizes in kilobytes; print them with a readable unit
du -s * | sort -nrk 1 | awk '{
	if ($1 >= 1073741824) printf("%7.2f %s\t%s\n", $1/1073741824, "TB", $2)
	else if ($1 >= 1048576) printf("%7.2f %s\t%s\n", $1/1048576, "GB", $2)
	else if ($1 >= 1024) printf("%7.2f %s\t%s\n", $1/1024, "MB", $2)
	else printf("%7.2f %s\t%s\n", $1, "KB", $2)
}'
```
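Here is a minimal Go sketch that spells out the same unit arithmetic (Go runs on Plan 9/amd64). Like the awk program, it assumes du reports sizes in kilobytes. The humanize function is only an illustration, not an existing Plan 9 tool. It divides by 1024 until the value fits the next unit and compares with >=, so exactly 1024 KB prints as 1.00 MB.

```go
package main

import "fmt"

// humanize formats a size given in kilobytes (du's default unit) with
// 1024-based thresholds, stopping at TB for anything larger.
func humanize(kb float64) string {
	units := []string{"KB", "MB", "GB", "TB"}
	i := 0
	for kb >= 1024 && i < len(units)-1 {
		kb /= 1024
		i++
	}
	return fmt.Sprintf("%7.2f %s", kb, units[i])
}

func main() {
	for _, kb := range []float64{512, 2048, 5 * 1048576} {
		fmt.Println(humanize(kb))
	}
}
```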

Of course, the whole point of Plan 9 is to centralize all the disk management into one system and access that from whatever devices you may be using on your network. So invest in big disks, take the time to install a file server, and never worry about disk management again. (Given that the 72 members of the old UNIX crew managed to gobble up "34000 512-byte secondary storage blocks" in 5 years, a Terabyte hard disk should, mathematically speaking, hold you for 22 million years.)* If you need to set up a complicated file server, you can investigate the installer scripts in /bin/inst to get a feel for how you would go about doing such a setup manually. You can also check out the adventuresin9 YouTube channel, which has many good videos on this and other sysadmin subjects. If you absolutely have to use Sneakernet, check out the USB sticks section below.
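Here is the estimate spelled out. It assumes the quoted figure was the combined usage of all 72 users, and that "a Terabyte" means 2^40 bytes:

$$
\frac{34000 \times 512\ \text{bytes}}{72\ \text{users} \times 5\ \text{years}} \approx 48\,356\ \text{bytes per user per year},
\qquad
\frac{2^{40}\ \text{bytes}}{48\,356\ \text{bytes/year}} \approx 2.27 \times 10^{7}\ \text{years}
$$

With a decimal Terabyte of 10^12 bytes, the result is closer to 2.07 × 10^7 years, so the order of magnitude holds either way.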

Plan 9 file systems all have snapshot capabilities, so as long as the file system itself is in working order, you can restore damaged or lost data without much hassle. Of course, there is a big if here: the file system can get damaged, and the machine it runs on can get damaged, and the building it lies in can get damaged, and the country it lies in can get damaged, and the world it lies in... you get the picture. So even if you have a super sophisticated, ultra-safe file system with all the trimmings, it is not safe! You should back up your data to an offsite location, preferably two: if an intruder compromises the data at one site, having two backups lets you verify which data is accurate and which is corrupt.

The trick to migrating from the concept of backups to the practice of it is twofold. First, backups must be taken automatically; doing backups manually ensures that they don't get done. Second, backing up only essential files will dramatically increase cost-effectiveness. If you are an organized individual, just write a proto(2) file for your important files and schedule a regular mkfs(8) job with cron(8). I, however, am not an organized individual. My first problem is that I boot my laptop only semi-regularly, so I need some easy way to schedule a job to run "at least" once a day/week/month: if a weekly job hasn't been run for a week or more when I boot my box, it needs to run again. Here is a simple script that accomplishes this:

```rc
#!/bin/rc
# schedule - run commands at scheduled intervals
# usage: schedule
# depend: window schedule in $home/bin/rc/riostart
#
# format: add commands to run in one of the following
# files in $home/lib: daily, weekly, monthly.

# set some defaults
rfork e
lock=$home/lib/lock
mkdir -p $lock
date=`{date}
datesec=`{date -n}
weekrun=Mon
daily=$home/lib/daily
weekly=$home/lib/weekly
monthly=$home/lib/monthly

# check monthly scripts
if(test -f $monthly){
	lockfile=monthly_$date(2)^_$date(6)
	if(! test -f $lock/$lockfile){
		rm -f $lock/monthly_*
		touch $lock/$lockfile
		@{rc $monthly}
	}
}

# check weekly scripts
if(test -f $weekly){
	lockfile=weekly_$datesec
	if(! test -f $lock/weekly_*) touch $lock/$lockfile
	oldlockfile=`{ls -p $lock/weekly_*}
	olddatesec=`{echo $oldlockfile | sed 's/weekly_//'}
	oldweeksec=`{echo $olddatesec + 604800 | bc}
	olddaysec=`{echo $olddatesec + 86400 | bc}
	# by default run weekly scripts on a certain day,
	# but make sure it runs at least once a week.
	if(~ $date(1) $weekrun || test $datesec -gt $oldweeksec){
		# also make sure it doesn't run twice in a single day
		if(test $datesec -gt $olddaysec){
			rm -f $lock/weekly_*
			touch $lock/$lockfile
			@{rc $weekly}
		}
	}
}

# check daily scripts
if(test -f $daily){
	lockfile=daily_`{date -i}
	if(! test -f $lock/$lockfile){
		rm -f $lock/daily_*
		touch $lock/$lockfile
		@{rc $daily}
	}
}

# respawn shell
rc
```

The script works by writing "lock" files with dates attached whenever a scheduled job is executed. If these dates are older than a day/week/month, the job is executed again and the lock files are updated. Feel free to expand the script to include quarterly, half-yearly or yearly jobs if you wish. Exactly how you want to run schedule depends on your needs and tastes, but one suggestion is to add window schedule to $home/bin/rc/riostart.

Now, to tackle my second problem: just as time management in my life is disorderly, so are my files. I know I have important stuff lying around somewhere that I need to back up, but it's too much hassle finding out where. Doing a full backup, however, is vastly inefficient, since my home directory contains some non-textual nonsense. What I need is a quick way of saying "back up everything, except this and that". Here is one suggestion:

```rc
#!/bin/rc
# nom - no match, print all files except those given
# usage: nom files...

rfork ne
temp=/tmp/nom-$pid
fn sigexit{ rm -f $temp }

if(~ $* */*){
	echo 'nom quitting: can''t handle ''/''s.' >[1=2]
	exit slash
}

ls -d $* > $temp
ls | comm -23 - $temp
exit # force file cleanup
```
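At its core, nom takes the set difference between the directory listing and the names to exclude. Here is a minimal Go sketch of the same idea, meant as an illustration rather than a replacement. It reads the current directory with os.ReadDir and drops any entry whose exact name is given on the command line. Unlike the rc version, it does not reject names containing a slash; such names simply never match a directory entry.

```go
package main

import (
	"fmt"
	"os"
	"sort"
)

// nom returns the entries that are not named in excluded, in sorted
// order, much like `ls | comm -23 - excluded` in the rc script.
func nom(entries, excluded []string) []string {
	skip := make(map[string]bool, len(excluded))
	for _, name := range excluded {
		skip[name] = true
	}
	var keep []string
	for _, name := range entries {
		if !skip[name] {
			keep = append(keep, name)
		}
	}
	sort.Strings(keep)
	return keep
}

func main() {
	dir, err := os.ReadDir(".")
	if err != nil {
		fmt.Fprintln(os.Stderr, "nom:", err)
		os.Exit(1)
	}
	names := make([]string, 0, len(dir))
	for _, d := range dir {
		names = append(names, d.Name())
	}
	for _, name := range nom(names, os.Args[1:]) {
		fmt.Println(name)
	}
}
```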

```rc
#!/bin/rc
# backup - backup important files to offsite storage
# usage: backup

rfork ne

# backup semi-important files
mkdir -p /tmp/backup
fn copy{
	mkdir -p $2
	if(test -d $1){
		mkdir $2/$1
		dircp $1 $2/$1
	}
	if not cp $1 $2
}
fn sigexit{ rm -rf /tmp/$backup /tmp/backup /tmp/CHECKSUM }

cd $home
for(file in `{nom bin doc games jw media pkg site tmp}) copy $file /tmp/backup
cd $home/bin
for(file in `{nom 386 amd64}) copy $file /tmp/backup/bin
cd $home/doc
for(file in `{nom books health os papers}) copy $file /tmp/backup/doc

# the archive name comes first after czf, then the files to archive
backup=9front-^`{date -i}^.tar.gz
tar czf /tmp/$backup /tmp/backup
cd /tmp
# PS: The first whitespace in sed here is a tab
md5sum $backup | sed 's/ / /' > CHECKSUM

# copy backup to offsite locations
fn sshcopy{
	sshfs $1
	if(! test -d /n/ssh/backup){
		echo Error: SSH failed! >[1=2]
		exit ssh
	}
	cp /tmp/$backup /n/ssh/backup
	cat /tmp/CHECKSUM >> /n/ssh/backup/CHECKSUM
}
sshcopy bkpserv1
sshcopy bkpserv2
exit # force file cleanup
```

Now, the script here is very much tailored to my own idiosyncratic needs, so don't just copy and paste it! For example, I omit some big directories in $home, such as media, where I put all of that non-textual mess, and site, where I keep my web site. I do copy bin and doc, but only parts of them. Clearly, such details will not be relevant for your setup, but I hope the example might inspire you to write a useful backup utility yourself. With these tools in place, I can just add backup to $home/lib/weekly, and a weekly ~10 MB backup of my ~10 GB* of used disk space is taken automatically, as long as I boot my laptop at least once a week. Of course, it's still useful to have a full tar czf /n/ssh/backup/9front-full.tgz $home backup lying around, but running that command manually once or twice a year suffices for my needs.

PS: If you happen to be a ZFS user, you may be yawning right about now. ZFS does indeed have many fancy features that the Plan 9 file systems lack, but in my humble opinion, the practicality of these features is overrated. For good data security you need two offsite backups even with ZFS, and with such a setup, additional data integrity and redundancy is somewhat overkill. Data compression, not to mention deduplication, is even less relevant. With Terabyte hard disks on commodity hardware nowadays we have infinite disk space, and infinite +50% extra is still infinite. Besides, if space were really at such a premium, redundancy would be evil. In any event, if you want self-healing and all that jazz in Plan 9, and don't want to wait for GEFS to catch up, just back up your files to a UNIX machine using ZFS (or better yet, run Plan 9 virtually from a UNIX machine using ZFS).

ZFS primer for non-ZFS systems:

```
snapshots:      yesterday
integrity:      md5sum myfiles.tar.gz >> CHECKSUM
redundancy:     cp myfiles.tar.gz /n/ssh/backup
compression:    gzip myfile
encryption:     auth/secstore -p myfile
replication:    tar xzf myfiles.tar.gz
deduplication:  <buy a disk man>
self healing:   tell mysysadmin / man gefs
```

Plan 9 does not really have package management facilities in the sense that a UNIX user would expect. The system is intended to be "fully-featured" (albeit minimalistic), and little third-party software exists; what does exist tends to be distributed as plain source code that the user must compile manually. Some package management solutions for Plan 9 have been toyed with, but for the most part Plan 9 users just compile what they need by hand. Here are a few examples to demonstrate what "package management" may entail in Plan 9:

PS: When compiling software in a Plan 9 terminal, remember to middle click the window and select scroll. Otherwise the compilation will freeze once the output has reached the bottom of the window (this is a "feature", not a bug).

```rc
; sysupdate # download latest sources
; cd / # rebuild system
; . /sys/lib/rootstub
; cd /sys/src
; mk nuke # sometimes needed after library changes
; mk install
; mk clean
; cd /sys/man # optionally rebuild documentation
; mk
; cd /sys/doc
; mk
; mk html
; cd /sys/src/9/pc64
; mk install # optionally rebuild (64-bit) kernel
; 9fs 9fat
; rm /n/9fat/9bootfat
; cp /386/9bootfat /n/9fat
; chmod +al /n/9fat/9bootfat
; cp /amd64/9pc64 /n/9fat
; reboot # if you have installed a new kernel
```

Instructions for kernel installation are slightly different for 32-bit or ARM systems; see section 7.2.5 in the fqa for more info. Of course, you do not need to reinstall the kernel and rebuild the docs for every minor update; usually all you need to do is:

```rc
; sysupdate
; cd /sys/src
; mk install
```

```rc
; cd /tmp
; 9fs 9front # download package
; tar xzf /n/extra/src/xscr.tgz
; cd xscr # compile programs and install them
; mk
; for(f in 6.*){ mv $f $home/bin/$cputype/^`{echo $f | sed 's/^6\.//'} }
```

```rc
; cd /tmp
; hget http://vmsplice.net/vim71src.tgz | gunzip -c | tar x
; cd vim71/src
; mk -f Make_plan9.mk install
```

```rc
; 9fs sources # download iso and mount it
; bunzip2 < /n/sources/extra/perl.iso.bz2 > /tmp/perl.iso
; mount <{9660srv -s >[0=1]} /n/iso /tmp/perl.iso
; cp /n/iso/386/bin/perl $home/bin/386 # install the binary
```

```rc
; cd /tmp
; git/clone https://git.sr.ht/~kvik/lu9
; cd lu9
; mk pull
; mk install
; lu9 script.lua # or interactively: lu9 -i
```

```rc
; cd /tmp
; git/clone https://github.com/bakul/s9fes
; cd s9fes
; mk
; mk inst
; s9 # do some scheming
```

```rc
# go will only work on amd64 architecture, so if you are
# running 386, rebuild to 64-bit first:
; cd /
; . /sys/lib/rootstub
; cd /sys/src
; objtype=amd64 mk install
; cd /sys/src/9/pc64 # build and install a 64-bit kernel
; mk install
; 9fs 9fat
; rm /n/9fat/9bootfat
; cp /386/9bootfat /n/9fat
; chmod +al /n/9fat/9bootfat
; cp /amd64/9pc64 /n/9fat
; sam /n/9fat/plan9.ini # make sure bootfile=9pc64 (not 9pc!)
; reboot # reboot to a 64-bit system, download Go stuff

# now, let's build go: we will bootstrap the latest version
# of go from 9legacy, then use that to build the go source
# (these instructions quickly get outdated):
; mkdir /sys/lib/go
; cd /sys/lib/go
; hget http://www.9legacy.org/download/go/go1.23.6-plan9-amd64-bootstrap.tbz |
	bunzip2 -c | tar x
; hget https://golang.org/dl/go1.23.6.src.tar.gz |
	gunzip -c | tar x
; mv go amd64-1.23.6
```