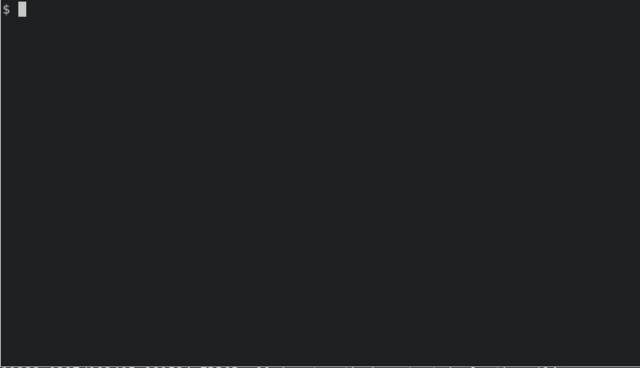

A "console" in UNIX speak (UNIX..? think Linux - best to confuse one concept at a time!) is the text-based interface, as opposed to the graphical interface (the "desktop" in people speak). The console is sometimes referred to as a "tty" (short for teletype, which is graybeard speak - the kind of people who refer to a monitor as a "glass teletype"). You can change between consoles and the graphical environment by pressing Ctrl + Alt + Fn (e.g. Ctrl + Alt + F1 will change to tty1 - the first console). Exactly how many consoles are available, and where the graphical environment is located, depends greatly on which version of UNIX you are running, and how it is configured. More on that later, but feel free to experiment! A console (technically a virtual console) is not the same as a terminal emulator, such as XTerm, which is a program you can run within a graphical environment. Neither is it a shell, by the way. A shell, like bash for instance, runs inside a terminal emulator or virtual console. Confusion reigns supreme however, since terminal emulators and virtual consoles are often just called "terminals", "consoles" or "shells" interchangeably (btw, all of them are "CLIs" - command line interfaces).

If you feel confused at this point, the following analogy might help: Think of a "shell" as a language, such as English. A "console" as an oldschool office from the 50's, with only basic essentials like a typewriter and a filing cabinet. And a "desktop" as a modern office of plexiglas and shiny chrome, complete with air conditioning, a coffee machine, fancy artwork on the walls, soft ambient background music and what not. It even has some pencils and papers lying around somewhere if people get in a productive mood... Although the office environments are certainly different, and reflect different time periods, in practice the occupants often work on the same things, and of course they all use the same language.
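If you want to know which kind of "office" you are currently sitting in, the tty command prints the device your shell is attached to. Here is a minimal sketch that guesses the kind of terminal from that device name. The function name classify_tty is made up for this example, and the device name patterns are assumptions about typical defaults, so your system may differ:

```shell
#!/bin/sh
# classify_tty - guess what kind of terminal a device path belongs to
# (hypothetical helper; the name patterns are assumptions, not gospel)
classify_tty() {
    case $1 in
        /dev/pts/*|/dev/ttyp*) echo "terminal emulator (pseudo terminal)" ;;
        /dev/ttyv*)            echo "virtual console (FreeBSD style)" ;;
        /dev/ttyC*)            echo "virtual console (OpenBSD style)" ;;
        /dev/ttyE*)            echo "virtual console (NetBSD style)" ;;
        /dev/tty[0-9]*)        echo "virtual console (Linux style)" ;;
        *)                     echo "unknown" ;;
    esac
}

# usage: classify_tty "$(tty)"
```

Run it once in a virtual console and once in XTerm, and the difference between the two should become a little less abstract.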

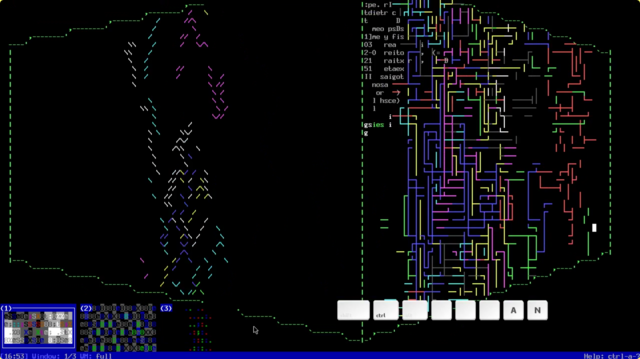

Yes. The text-based environment in UNIX is incredibly versatile and powerful. Programmers and system administrators who log into a UNIX server remotely will typically work exclusively in the console, since working on a remote desktop (similar to TeamViewer in Windows) is just too inefficient. But can a console really function as an everyday desktop? That is what this article will try to explore, and I think you will be pleasantly surprised at just how useful a text-based environment can be!

Useful you say? Isn't a text based environment like... boring?!? That depends. Learning to use the console certainly requires effort, just as it does to learn any language. But once mastered, you don't think much about it as either boring or fun, instead you focus on the task at hand. An article you read might pique your interest or not, but you wouldn't consider the English language itself as boring would you? Once a language is learned you don't think much about it.

PS: Don't let the term language here scare you. Learning another human language is a huge task, since it consists of tens of thousands of words and painfully convoluted grammar. In contrast if you have learned only a hundred "words" (ie. commands), you are a fluent UNIX speaker, even knowing just a dozen or so is usually enough to get by. Grammar, if at all applicable, is very simple.

Since our goal here is to illustrate day-to-day "desktop" usage, we will not go too much into system administration and programming (although we will have to dip our toes into such matters a little bit). The internet is brimming with articles on these matters, so just Google around if you need some help ;^) The article assumes you are already familiar with basic UNIX commands, and many solutions are provided as shell scripts. If you don't have the skill to read such programs, the following books may be useful additions to your bookshelf:

Despite not delving deeply into administration and programming however, we will cover much ground and therefore I try to give brief instructions. You are encouraged to read the manpages and relevant documentation yourself to get the finer details. I will start off by discussing how to configure and use the console, and introduce some basic topics. Although necessary, this first section is mind-numbingly boring, so you might want to skip ahead to the fun parts. Before we do any of that though, let's settle some ugly disputes first.

WARNING: The following section contains strong language and gratuitous violence to truth, taste and civility. Do not let children or lawyers, nor any people with weak constitution or humor read it. Feel free to send me a complaint if you feel unflamed after reading this section.

You would be surprised how often, given any topic on UNIX, the discussion is derailed at the onset by some newb asking: By the way, what distro should I use? I have heard of this thing called BSD, is that better or worse than Linux? While we are at it, what text editor would you recommend? What browser? What desktop? What programming language? And so forth... Thankfully, we are more or less avoiding that entire GUI angle in this article, so many of these questions can be safely ignored. But the first two will no doubt be asked, so I suppose we'll have to answer them.

Originally, there were two kinds of UNIX, the one created by hippies at the University of California at Berkeley, called BSD (Berkeley Software Distribution), and the one created by evil monsters at AT&T headquarters, called SysV (UNIX System V). Our distant forefathers from the 80's fought valiantly in the UNIX wars, and many brave men and women lost their arguments in Usenet battles, as the two giants fought for supremacy. Today however, AT&T UNIX is dead. Rumor has it that some remnant babies of this corporate monster still exist in the wild, in the form of AIX, HP-UX, and Solaris, but like I said, it's just a rumor. For all intents and purposes BSD is the only candidate that remains standing, and thus wins by default (apparently some kid in Finland has created his own clone of SysV, but I can't see that going anywhere). Confusingly though, there exist several versions of BSD today. So which is the best? Well... that is a tricky question. But I suppose if we have to pick one, and assuming you aren't into merchandising or fanaticism, the popular choice would be NetBSD. It can run on all the popular platforms, such as i386, and with pkgsrc you can even compile nethack on NetBSD via the net, what more could you want?

But what if you wanted to take this new Finnish hack for a spin, there's about a thousand Linux distros out there, so which one is the right one for you? Well, using logic we can narrow it down to two candidates: If you are one of those people who hate a multitude of distros, then Slackware is clearly the right choice, I mean all the other ones are just upstarts and spinoffs, exactly the kind of thing that you hate, right? On the other hand, if you are one of those people who love a multitude of distros, then LFS is the one for you, that way you can make as many distros as you like. You see, it doesn't have to be so hard if we just put our minds to it.

PS: Realistically BSD is the only candidate here as a day to day driver, but it's fun nonetheless to fire up a VM and play with Linux every so often. I mean, young folks do have good ideas on occasion.

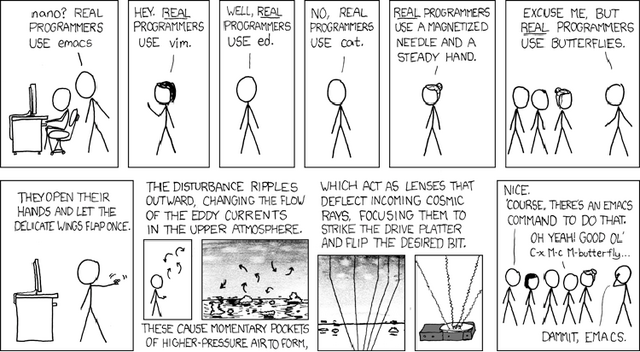

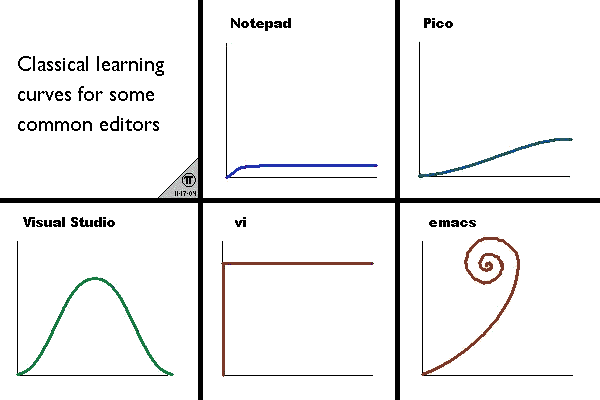

Another source of inflammatory infighting in UNIX is the choice of text editor. There are too many popular fad editors out there to mention, and they all have nifty little features that lure unsuspecting newbies into their fold. Now, I'm not here to judge. Some people cannot read a book without pictures, and some developers cannot write code without syntax highlighting, I get it. But for the true UNIX hacker there is only one editor, only one that adheres to the UNIX principles, the official one; ed. Like all true UNIX programs ed can take its input arbitrarily from the keyboard, a file or a pipe, and behave consistently in all cases. It can work in a true textual environment, meaning on paper. Try using vi with a lineprinter as your interface! Real programmers do not need visual aids, like some invalid, they see their code in their minds! To learn how to use ed, read the manpage. "It really is that simple", to quote Steve Jobs. If you need more hand holding however, I suggest reading Appendix 1 in The UNIX Programming Environment (1984) by Kernighan and Pike, or read Ed Mastery (2018) by Michael W. Lucas. Once you've got it all down, install bsdgames and run quiz function ed-command for a nice lesson in humility.

Even though the ed manual is an easy read, there are illiterate users, some by necessity, some by choice. Fear not, nano is for you! Start nano. See the ^X Exit at the bottom? It means: Hit Ctrl-X to Exit, I'm sure you can work out the rest yourself.

PS: The BSD editor ee (easy editor) is better, since it's twice as easy to type.

Even though ed is the standard UNIX editor, vi is the popular editor for the masses. No doubt because of its renowned user-friendliness. It has spawned many forks, the most popular one being vim (vi improved). As the name suggests, vi is a visual editor, so you can actually see what's going on. And the keybindings are mnemonic enough to closely resemble spoken English. The only thing you really need to learn in order to become a vi user is modes. It works like this: When you start vi it opens the file in normal mode. In this mode you can move around, and do basic bulk editing on the text. Things like d3w (delete three words), yy (yank, just yank - the line) and p (paste) it somewhere. To input text, just a (append) it. To escape input mode you hit Escape of course. See, it's dead simple. Finally, vi lets you run commands that affect the whole file, things like saving and quitting or search and replace. To run one of these commands, type colon in normal mode, and issue your orders. For example, :wq (write'n quit), :q! (quit dammit!), or :%s/good/bad/g (100% of file, substitute good with bad, globally). Using vi is simply a matter of understanding what the keys mean. Small wonder it's so ubiquitous, you just can't beat the simplicity. Here is a cheat sheet to get you started.

PS: The BSD editor nvi (new vi) is better, since it's bug-for-bug compatible with vi(1).

Emacs is best summarized in the following statement: Emacs is an operating system that only lacks a good text editor. There are several forks of Emacs around, but the most popular one by far is GNU Emacs. Essentially Emacs makes this entire article redundant, anything you can do in the console, you can do in Emacs. For example, you can type M-x shell-command (that is Alt-x, then type shell-command) to run any command, like ifconfig or firefox or what have you. To run a full shell session type M-x shell (if you need to run fancy ncurses programs use M-x ansi-term).

Time does not permit me to discuss the eww web browser, gnus news and mail client (or one of the several superior candidates), erc irc client, dired file manager, calendar, ses spreadsheets, calculator, tetris, snake, dunnet text adventure, the psychoanalyzer doctor and other tools and toys that ship with the editor by default. Not to mention the thousands of 3rd party apps that can be installed with its package manager. Such as the emms multimedia system, slime for serious Lisp hacking, and org-mode for serious text hacking. Naturally any kind of file, including PDF's and images can just be opened directly.

All of the above major modes can be started with M-x modename. To open up a file you can type C-x C-f (that is Ctrl-x then Ctrl-f). These modes and files are called "buffers" in Emacs jargon, to open up a non-current buffer type C-x b. Tab-autocomplete works everywhere, so use that for what it's worth. Delete a buffer with C-x k. To quit Emacs, type C-x C-c, and to cancel an action type C-g. Emacs is also a window manager. To split the window vertically in two type C-x 2, to split it horizontally type C-x 3 (these can be arbitrarily split again and again). To close a window type C-x 0, to make a window fullscreen type C-x 1, to switch to another window type C-x o (for "other").

You can even use Emacs as a text editor. It's true! You can start the tutorial with C-h t, to learn how, but I wouldn't recommend it. The one thing Emacs does not come with by default is a decent text editor. Luckily though, you can emulate vi in Emacs. You could use viper mode in vanilla Emacs, but a better solution might be to install the 3rd party package evil. You can do so using the above mentioned package manager, or you can use one of the many user-friendly Emacs distros out there, such as Prelude, Graphene, Emacs starter kit, Spacemacs or DoomEmacs. Wait... package manager... Lisp machine... distros?!? You thought I was kidding when I called Emacs an operating system didn't you? (Honestly though, Emacs is an ungainly pile of pants, but it does have one saving grace (Lisp (the ultimate language (for programming)))))))))). Btw, here is a cheat sheet to get you started.

PS: The BSD editor mg (micro gnu emacs) is better, since it's written in a higher level language.

As mentioned you normally have multiple virtual consoles available. So if you're waiting for a job to finish in tty1, you can hit Ctrl + Alt + F2 to jump over to tty2. When you want to go back to the first console again, just hit Ctrl + Alt + F1. Most systems will also allow you to scroll back the text with Shift + PgUp and Shift + PgDown, this enables you to go back and read previous output. In the following sections I will talk about how to configure fonts, colors, keyboard layouts and the consoles in general. The method of doing this varies greatly from system to system, so you don't need to read all of the subsections, just the ones that are relevant for you.

This hardware vendor deserves special mention. Now I know that many a Windows gamer out there loves his Nvidia card like his favorite pet monkey. And sure enough the Nvidia engineers can produce stunning specs, and provide hours of gaming fun like none other, but they could not write quality software if their lives depended on it! Their driver is a bad joke, and an affront to the finer feelings of system administrators everywhere. It is clear that the Nvidia developers have the Windows mindset, where it is perfectly fine, nay expected, to write binary blobs that bloat the system and boldly crash it in ways no one has crashed it before!

If you are using their proprietary driver on any version of UNIX (especially anything besides Linux), then you will likely not be able to switch back and forth between the console and your graphical environment. In fact you often cannot run multiple graphical environments simultaneously either, something that is unheard of in the Windows world, but is a given on UNIX systems. Don't be surprised either if your system suddenly freezes or crashes for no apparent reason whatsoever, it's to be expected. The only way to work around these issues is to use either the reverse engineered open source Nvidia driver, or configure X to use the generic VESA driver. In both cases you will usually have very shabby resolution, both on your desktop and on your console. Using the console with an Nvidia card may cause serious headache, but take a look at the Putting a Console on the Desktop section below for a simple workaround.

As a side note: The OpenBSD developers have embargoed Nvidia on their system. If more operating systems would follow their example, instead of showing their finger in frustration (looking at you Linus Torvalds), Nvidia might just take a hint and clean up their act.

To be fair, other vendors like Intel, have also shown a shocking lack of concern for the quality of their own product. Of course Intel's specialty is multi-processing and virtualization, not graphics, so it's in those areas that they bury their bodies. As far as graphics are concerned, Intel should not give you any problems on the console.

To be extra fair, some have objected to my objections about Nvidia. I find such criticism of my criticism surprising. Not because I might be wrong, but because people have cared enough about my blog to criticize it. I am humbled. Let me stress that I am talking about *console* issues with proprietary Nvidia drivers. Desktop graphics usually work fine. And I am sure many Linux distros handle even these proprietary drivers gracefully on the console. The fact that I have issues does not mean that you have issues. Naturally, anything you read on my blog, or the web, can be obsolete, opinionated and/or dim. Beware.

If you have a graphical desktop already running, getting to it from the console is simply a question of finding out where it is located. If you are unsure just hit Ctrl + Alt + F1, then Ctrl + Alt + F2, and so on all the way up to F12 until you hit it (it is often at F5 or F7, but it varies). Note that while some systems allow you to use the shorthand Alt + Fn, when switching consoles, you need to use the full keybinding sequence Ctrl + Alt + Fn, when switching back and forth between the graphical environment and the text consoles.
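If hunting through twelve function keys sounds tedious, you can also ask the system. On Linux the currently active console is (as far as I know) listed in /sys/class/tty/tty0/active, so running the sketch below from a terminal emulator inside your desktop should tell you where the desktop lives. The helper name vt_keys is invented for this example, and it only makes sense for the first twelve consoles:

```shell
#!/bin/sh
# vt_keys - turn a tty name such as "tty7" into the keys that switch to it
# (hypothetical helper; only meaningful for tty1 through tty12)
vt_keys() {
    n=${1#tty}                       # strip the "tty" prefix, leaving the number
    case $n in
        ''|*[!0-9]*) echo "not a virtual console: $1" >&2; return 1 ;;
    esac
    echo "Ctrl + Alt + F$n"
}

# Linux only (assumption): the active console is listed in sysfs
# vt_keys "$(cat /sys/class/tty/tty0/active)"
```

On other systems the sysfs file will not exist, so there the brute force method above remains your friend.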

You can also start a graphical environment from a console. If no graphical environment is running, just type startx. This will launch the system's default graphical environment, which is often twm, a horribly antiquated window manager (many who see this for the first time do not realize they are running a desktop, they just assume that the computer got broken somehow). You can configure startx to run something else by adding instructions in ~/.xinitrc (that is .xinitrc in your home directory). Here is a short example that sets the keyboard layout to Italian and launches Xfce:

setxkbmap it

exec dbus-launch --exit-with-session startxfce4

PS: Getting the launch instructions right for a modern desktop, such as KDE or GNOME, can be tricky (the above Xfce instructions can also vary from system to system). If you're having problems starting them I suggest you try with a simple window manager first, such as exec openbox, to check that the actual graphical environment (normally the X Window System, sometimes referred to as X11, X.Org, or just "X") is working. If it is, you have probably misconfigured the KDE/GNOME/Xfce launch instructions, and you'll know what to Google for.
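The "try something simpler first" advice can be baked into ~/.xinitrc itself. The following is only a sketch: first_available is a helper made up for this example, and the candidate names (startxfce4, openbox, twm) are just the ones mentioned above, so substitute whatever you actually have installed:

```shell
#!/bin/sh
# first_available - print the first command from the argument list found in PATH
# (hypothetical helper for picking a session that actually exists)
first_available() {
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            return 0
        fi
    done
    return 1
}

# in ~/.xinitrc one might then write (candidate names are only examples):
# session=$(first_available startxfce4 openbox twm) && exec "$session"
```

This way a missing or renamed desktop launcher drops you into a plain window manager instead of straight back to the console.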

If a graphical environment is already running you need to launch your new desktop in a different virtual screen. You can do so like this: startx -- :2, the number here is arbitrary, what matters is that the screen ID number must be unique.
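Picking a unique number by hand gets old quickly. A running X server normally leaves a lock file named /tmp/.X<n>-lock behind for its display number, so a rough sketch for finding a free one could look like this (free_display is a made-up name, and the lock file location is an assumption that holds for X.Org on the systems I have seen):

```shell
#!/bin/sh
# free_display - print the lowest display number without an X lock file
# usage: free_display [lockdir]   (lockdir defaults to /tmp, an assumption)
free_display() {
    dir=${1:-/tmp}
    n=0
    while [ -e "$dir/.X$n-lock" ]; do
        n=$((n + 1))
    done
    echo "$n"
}

# startx -- ":$(free_display)"
```

Note that a crashed X server can leave a stale lock file behind, in which case this simply skips a number that would have been fine.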

Multi-monitor setups are not possible on any UNIX console. That is to say, you can have as many monitors as you like, but they will all show the same screen. Multitasking however is possible. For one, you can configure a number of virtual consoles and switch between them (see also the tmux section below). Secondly, you can have a multi-monitor setup in X, and run a "console-like" desktop, such as dwm.

Linux will usually configure a number of consoles by default, 6 consoles and the graphical environment on the seventh seems to be a popular configuration. You can configure an arbitrary number of consoles on Linux, see the next sections for further details. You can also use the short hand Alt + Fn and Alt + Arrow-Key to change consoles.

You can set keyboard layout with loadkeys, such as loadkeys dvorak.map. The keyboard maps are often located in /usr/share/keymaps or /usr/share/kbd/keymaps (PS: This approach should also work on systemd distros, but did not work for me on Debian. You could try dpkg-reconfigure keyboard-configuration instead (naturally that will only work on Debian-like distros)). You can change fonts with setfont, such as setfont -v Uni3-Terminus12x6, the console fonts are usually located in /usr/share/consolefonts or /usr/share/kbd/consolefonts.

The Linux console supports multiple colors and you can use echo to send control sequences to manipulate the console color settings. Adding this to the end of ~/.profile will make your console use the Solarized color theme:

# solarize the tty

if [ "$TERM" = "linux" ]; then

echo -en "\e]P0073642" #black

echo -en "\e]P1dc322f" #red

echo -en "\e]P2859900" #green

echo -en "\e]P3b58900" #yellow (brown)

echo -en "\e]P4268bd2" #blue

echo -en "\e]P5d33682" #magenta

echo -en "\e]P62aa198" #cyan

echo -en "\e]P7eee8d5" #white (light gray)

echo -en "\e]P8002b36" #bright black (dark gray)

echo -en "\e]P9cb4b16" #bright red

echo -en "\e]PA586e75" #bright green

echo -en "\e]PB657b83" #bright yellow

echo -en "\e]PC839496" #bright blue

echo -en "\e]PD6c71c4" #bright magenta

echo -en "\e]PE93a1a1" #bright cyan

echo -en "\e]PFfdf6e3" #bright white

clear #for background artifacting

fi
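The magic strings above all follow one pattern: the escape character, then ]P, then one hex digit naming the palette slot, then six hex digits of RRGGBB color. If you want to experiment with your own themes, a small sketch like the following may help. The function names are made up for this example, and it uses printf with \033 instead of echo -en, since not every shell's echo understands -e:

```shell
#!/bin/sh
# palette_seq - print the Linux console escape that redefines one palette entry
# usage: palette_seq index rrggbb   (index is one hex digit, 0-F)
palette_seq() {
    printf '\033]P%s%s' "$1" "$2"
}

# palette_reset - print the escape that restores the default palette
palette_reset() {
    printf '\033]R'
}

# example: make "black" a dark blue, admire it, then undo the damage
# palette_seq 0 002b36; clear
# palette_reset; clear
```

These sequences are specific to the Linux console, so expect terminal emulators and other UNIX consoles to ignore them or print garbage.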

Not only can the Linux console display colors of your own choosing, but it is also capable of displaying real graphics. It can do so using the framebuffer device. In order to use it you must first make sure your user has permission to access /dev/fb0 and the files under /dev/input. This usually means you need to add your user to the groups video and input. You can do so by running this command as root: usermod -a -G video,input <myuser>. You also need to install the fbdev driver, if it isn't already included. The method of doing so varies from distro to distro. For instance on Slackware you can install it like so: installpkg /mnt/cdrom/extra/xf86-video/*.txz, and on Debian: apt install xserver-xorg-video-fbdev fbset gpm. Look up your distro's documentation.
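Before blaming the framebuffer for not working, it is worth checking whether the group change actually took (you normally have to log out and back in first). A minimal sketch, where missing_groups is a hypothetical helper and video and input are simply the group names mentioned above:

```shell
#!/bin/sh
# missing_groups - print the wanted groups that are absent from a group list
# usage: missing_groups "groups the user has" wanted...
missing_groups() {
    have=" $1 "                     # pad with spaces so whole names match
    shift
    for g in "$@"; do
        case $have in
            *" $g "*) ;;            # already a member, nothing to report
            *) printf '%s\n' "$g" ;;
        esac
    done
}

# usage: missing_groups "$(id -Gn)" video input
```

If it prints nothing you are a member of both groups, and the problem lies elsewhere.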

Two examples of programs you can use on the Linux framebuffer are fbterm and fbi. The fbterm terminal can use any fonts available under the graphical environment, which easily enables you to have support for exotic languages like Arabic or Chinese. In combination with the image viewer fbi, you can also set a terminal wallpaper (PS: You cannot launch another framebuffer program from within fbterm, you need to do that from a regular text console).

#!/bin/sh

# fbtermbg - start fbterm with wallpaper

# usage: fbtermbg wallpaper

# depend: fbi

(sleep 1; cat /dev/fb0 > /tmp/wallpaper.fbimg) &

fbi -t 2 -1 --noverbose -a "$1"

export FBTERM_BACKGROUND_IMAGE=1

cat /tmp/wallpaper.fbimg > /dev/fb0

fbterm

Some framebuffer programs will hijack your screen, so that you cannot switch to other consoles or the graphical environment. Don't panic! Everything should return to normal once you have quit the program (PS: If your console is all garbled after you have quit a framebuffer program, the reset command should fix it).

Legacy Linux systems, and a few modern exceptions, such as Slackware, Gentoo and CRUX, do not use systemd.

You can change virtual console settings in /etc/inittab. To add an eighth tty in Slackware, add this line to /etc/inittab:

c8:12345:respawn:/sbin/agetty 38400 tty8 linux

This takes effect after a reboot (or, without rebooting, after telling init to re-read the file with telinit q). In non-systemd Linux you can also switch to tty13-24 with AltGr + Fn.

Slackware boots in a text based environment by default. To change this to a graphical login, change the line id:3:initdefault: to id:4:initdefault: in /etc/inittab. On Slackware runlevel 3 is text mode login, whereas runlevel 4 is graphical login (other non-systemd distros may number their runlevels differently, so check the comments in your own /etc/inittab). The process described here should be fairly similar on any Linux distribution that doesn't use systemd.
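If you want a whole batch of extra consoles, typing the getty lines by hand is a chore. Here is a sketch that prints them for you, using the same line format as the tty8 example above. The function name inittab_gettys is made up, and the agetty options are simply copied from that example, so compare the output with the existing lines in your /etc/inittab before pasting anything in:

```shell
#!/bin/sh
# inittab_gettys - print inittab getty entries for consoles first..last
# (line format copied from the tty8 example; adjust to your distro)
inittab_gettys() {
    n=$1
    while [ "$n" -le "$2" ]; do
        echo "c$n:12345:respawn:/sbin/agetty 38400 tty$n linux"
        n=$((n + 1))
    done
}

# usage: inittab_gettys 8 12 >> /etc/inittab   (as root, after a backup!)
```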

Most modern Linux systems, including Debian, Red Hat, SUSE, Arch, and quite a few others, use the systemd meta system daemon.

To change the number of consoles, to say 12, in Debian, uncomment NAutoVTs in /etc/systemd/logind.conf and set it to NAutoVTs=12. This will take effect after a reboot. On Linux systems with systemd, you can switch between the tty13-24 with Shift + Alt + Fn.
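The uncomment-and-edit step can also be done with sed, which is handy if you configure more than one machine. A minimal sketch, assuming the option sits on its own line as NAutoVTs=... or #NAutoVTs=... (set_nautovts is a hypothetical helper, and it prints the result instead of touching the file, so you can inspect it first):

```shell
#!/bin/sh
# set_nautovts - print a logind.conf with NAutoVTs set to the given number
# usage: set_nautovts file number > file.new
set_nautovts() {
    # match the option whether or not it is commented out
    sed "s/^#*NAutoVTs=.*/NAutoVTs=$2/" "$1"
}

# usage (as root):
# set_nautovts /etc/systemd/logind.conf 12 > /tmp/logind.conf.new
```

Compare the new file with the old one using diff before you copy it into place.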

To configure Debian to boot into text mode instead of a graphical login, do this: systemctl set-default multi-user.target, to switch back to graphical login: systemctl set-default graphical.target. The process described in this section should be fairly similar to any Linux distribution that uses systemd.

Linux users often take a lot for granted, they tend to get horrified when discovering that a different UNIX system doesn't have some functionality they have gotten used to, or even if they just do things differently (they may even shout "this isn't UNIX!!!" without realizing the irony - it's usually Linux that's doing things in a non-UNIX'y fashion). So if you're planning to use a BSD system or some other UNIX variety, and have a Linux background, keep an open mind and be prepared to adjust your expectations!

When it comes to the console, non-Linux systems will not support graphics on the framebuffer, nor support exotic UTF-8 characters (i.e. English only), and color and font support is often very limited. In fact many ncurses applications may not work well on non-Linux consoles. Some systems also restrict how many consoles you are allowed to have. If you think this all sounds horribly limiting, you probably haven't spent your youth writing PDP-11 assembly in V6 using ed (all of these restrictions can be easily overcome though by simply running terminal emulators in a graphical desktop). Lastly, keybindings might be a little different, although Ctrl + Alt + Fn will always work.

Despite these restrictions, you can still do neat things on any UNIX console with a little know-how. The above mentioned trick to make a Solarized console theme in Linux will not work on most UNIX consoles, since it relies on a Linux-specific escape sequence for redefining the palette, but virtually all of them have 8 color ANSI support, so you can do some basic color tweaking. Here is a simple and portable script that can set a few basic tty color schemes (unlike the Linux trick though, the themes are not preserved if you run a terminal application with colors, such as vim or less, so a better solution is to configure your system to use the colors you want - more on that later):

#!/bin/sh

# ttycolor - choose tty colors

# usage: ttycolor theme

# bugs: not persistent across colorful tty apps

#

# explanation:

# (\033 is the portable spelling of the escape character,

# not every printf understands \e)

# printf '\033[1m' - turn on boldface (or "light" color)

# printf '\033[4m' - turn on underscore - not used here

# printf '\033[5m' - turn on blink - not used here

# printf '\033[7m' - reverse colors - not used here

# printf '\033[0m' - turn off attributes, eg. boldface

# printf '\033[4nm' - specify background color ("boldface" has no effect)

# printf '\033[3nm' - specify foreground color, where n is:

# 0 = black 2 = green 4 = blue 6 = cyan

# 1 = red 3 = yellow 5 = magenta 7 = white

usage(){

echo 'Usage: ttycolor (geek|tron|minoca|sun|obsd|nuke|default)' >&2

exit 1

}

if [ ! $# = 1 ]; then

usage

fi

# set tty colors

case $1 in

geek) printf '\033[0m\033[32m\033[40m' ;; # green on black

tron) printf '\033[1m\033[36m\033[40m' ;; # cyan on black

minoca) printf '\033[1m\033[33m\033[42m' ;; # yellow on green

sun) printf '\033[0m\033[30m\033[47m' ;; # black on white

obsd) printf '\033[1m\033[37m\033[44m' ;; # white on blue

nuke) printf '\033[1m\033[33m\033[41m' ;; # yellow on red

default) printf '\033[0m\033[37m\033[40m' ;; # white on black

*) usage ;;

esac

clear
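Before designing your own theme it helps to see what your console actually makes of the eight basic colors. This little sketch (ansicolors is just a name I made up) prints one sample line per foreground color together with its code, using the same ANSI sequences as the script above:

```shell
#!/bin/sh
# ansicolors - print one sample line per basic ANSI foreground color
ansicolors() {
    n=0
    for name in black red green yellow blue magenta cyan white; do
        # color on, color name, attributes off, then the numeric code
        printf '\033[3%sm%s\033[0m (3%s)\n' "$n" "$name" "$n"
        n=$((n + 1))
    done
}

# usage: ansicolors
```

The black line will of course be invisible on a black background, which is rather the point of checking.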

FreeBSD has 8 virtual consoles configured and the graphical interface on the ninth by default. Modern versions of FreeBSD use the vt(4) console driver. It allows you to configure up to 16 consoles, and switch to tty13-16 with Shift + Alt + F1 ... F4. You can also use the Alt + Fn shortcut, but not the arrow keys. To scroll backwards and read previous output hit Scroll Lock, and then use Page Up and Page Down. Hit Scroll Lock again when you are done (if your laptop doesn't have Scroll Lock you can usually simulate it in various ways, Fn + k works on my ThinkPad T430 for instance). To configure more than 8 consoles edit /etc/ttys, for instance this line: ttyv9 "/usr/libexec/getty Pc" xterm onifexists secure will enable a ninth text console (ttyv8 is normally left to the graphical interface). To enable all 16 consoles use ttyva ... ttyvf, not ttyv10 ... ttyv15. You can tweak other console settings by adding options to /etc/rc.conf. For instance, these lines:

moused_enable="YES"

allscreens_flags="-f 8x8 /usr/share/vt/fonts/terminus-b32.fnt green"

keymap="us.dvorak.kbd"

kld_list="i915kms"

will enable the console mouse, use large green terminus fonts and the dvorak keyboard layout. Lastly it will load the Intel graphics kernel driver, you need to have the drm-kmod package installed for this to work (if you are using Nvidia or Radeon (AMD) you need to change this line accordingly). You can interactively select a console font with vidfont, or use the vidcontrol command to adjust all of the above settings.
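The hexadecimal device names in /etc/ttys are easy to get wrong, so here is a sketch that prints the entries for you. It assumes the terminal reached with function key number N lives on ttyv<N-1>, written as a single hex digit, and it reuses the getty line from the ttyv9 example above. The function name vt_ttys is made up for the occasion:

```shell
#!/bin/sh
# vt_ttys - print /etc/ttys entries for FreeBSD terminals first..last (1-16)
# terminal N lives on ttyv<N-1>, with the number written as one hex digit
vt_ttys() {
    n=$1
    while [ "$n" -le "$2" ]; do
        printf 'ttyv%x\t"/usr/libexec/getty Pc"\txterm\tonifexists secure\n' $((n - 1))
        n=$((n + 1))
    done
}

# usage: vt_ttys 10 16   (prints entries for ttyv9 through ttyvf)
```

Compare the output against the existing lines in your /etc/ttys rather than appending it blindly, since some of the entries may already be there.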

Even with 16 ttys configured in /etc/ttys, FreeBSD limits you to a maximum of 12 consoles by default, to add up to 16, you need to tweak the kernel a bit:

# cd /usr/src/sys/$(uname -m)/conf

# cp GENERIC MYKERN

# sed -i '' 's/GENERIC/MYKERN/' MYKERN

# echo options VT_MAXWINDOWS=16 >> MYKERN

# cd /usr/src

# make buildkernel KERNCONF=MYKERN

# make installkernel KERNCONF=MYKERN

# shutdown -r now

DragonFly BSD uses the old sc(4) console driver from FreeBSD, but otherwise the setup is exactly the same. By the way, you don't need to tweak the kernel in order to enable all 16 consoles, like you do in FreeBSD (PS: the sc driver might have problems switching between the graphical environment and consoles).

PS: BSD systems write kernel messages to the first console. This can be quite annoying if you happen to be working on other things there, so work on any console except the first one to avoid much consternation.

OpenBSD uses the wscons(4) console driver. It requires you to switch between consoles using Ctrl + Alt + Fn, you cannot use the shorthand Alt + Fn. This is a design choice, made to avoid keybinding conflicts. There are no key bindings to switch beyond 12 consoles.

In OpenBSD you have four consoles enabled by default, with the fifth tty being reserved for the graphical environment. (if you plan on using a graphical login manager, make sure that ttyC4 is off!) Adding more consoles is done in /etc/ttys:

ttyC7 "/usr/libexec/getty std.9600" pccon0 on secure

Oh, by the way, while you're editing /etc/ttys, change the vt220 option for your active consoles to pccon0. vt220 is an ultra-conservative safe choice, but pccon0 has more modern capabilities, such as unicode, colors and curses. The 11th and 12th console must be ttyCa/ttyCb, not ttyC10/ttyC11.

Rebooting will not activate your new consoles however, since you need to "create" them first. Doing so manually is a bit tedious, so instead we can recompile the kernel with some new settings:

# cd /usr/src/sys/arch/$(uname -m)/conf

# cp GENERIC.MP CUSTOM

(Assuming you are running a multi-processor kernel, if not copy GENERIC) Now open up CUSTOM in an editor and add the following lines:

option WS_KERNEL_FG=WSCOL_WHITE

option WS_KERNEL_BG=WSCOL_BLACK

option WS_DEFAULT_FG=WSCOL_GREEN

option WS_DEFAULT_BG=WSCOL_BLACK

option FONT_BOLD8x16

These settings will result in kernel messages being written in a white font, and normal text in green. We also changed the font to a smaller type, fitting more text onto our screen (you'll find a list of available fonts in /usr/src/sys/dev/wsfont/wsfont.c, if you have installed sources). Finally change the WSDISPLAY_DEFAULTSCREENS=6 to WSDISPLAY_DEFAULTSCREENS=12 in GENERIC (regardless of whether you are running a multi-processing kernel or not). Now that the customizations are done, we can recompile the kernel:

# config CUSTOM

# cd ../compile/CUSTOM

# make clean

# make

# make install

# shutdown -r now

PS: X is big, bloated and old, it is a major security risk! The OpenBSD developers are somewhat conscientious about security. They have therefore reconfigured X to run in user mode, denying it any direct access to the system. This is great for security, but a consequence of doing so is that you cannot run more than one graphical environment at a time. You can use Xephyr or Xnest to nest X instances inside each other though (eg. (Xephyr :2 -screen 1920x1080 &); sleep 1; DISPLAY=:2 openbox). Also the normal way of starting X in OpenBSD from the console is not with startx, but rather doas xenodm, which starts the xdm-like login manager. Finally, as mentioned OpenBSD does not support Nvidia cards. Graphics may still "work" on such cards, but you will only get generic VESA output with poor resolution.

PS: OpenBSD, like all BSD systems, writes kernel messages to the first console. So it's a good idea to work on a different console to avoid interruptions.

NetBSD also uses the wscons(4) console driver, like OpenBSD. It requires you to switch between consoles using Ctrl + Alt + Fn, you cannot use the shorthand Alt + Fn.

Configuring the NetBSD console is fairly similar to OpenBSD. Add new consoles to /etc/ttys, for instance:

ttyE7 "/usr/libexec/getty Pc" wsvt25 on secure

NetBSD also has four consoles by default and uses the fifth as the graphical interface (so keep ttyE4, the fifth tty in /etc/ttys, off if you plan on using a graphical login manager!). We can change some of the kernel defaults and recompile like so:

# cd /usr/src/sys/arch/$(uname -m)/conf

# cp GENERIC CUSTOM

# vi CUSTOM

Let's tweak some color and font settings, as well as enabling 8 ttys: Change WS_KERNEL_FG=WSCOL_GREEN to WS_KERNEL_FG=WSCOL_WHITE, and add options WS_DEFAULT_FG=WSCOL_GREEN. Uncomment WSDISPLAY_DEFAULTSCREENS=4 and change it to WSDISPLAY_DEFAULTSCREENS=8, and add options FONT_DEJAVU_SANS_MONO12x22 (you'll find a list of available fonts in /usr/src/sys/dev/wsfont/wsfont.c). Then recompile the kernel with these changes:

# config CUSTOM

# cd ../compile/CUSTOM

# make depend

# make

# mv /netbsd /netbsd.old

# mv netbsd /

# shutdown -r now

By default the NetBSD kernel only allows you to have a maximum of 8 consoles. Enabling more than that requires some more kernel hacking (see Sidenote below), so I recommend using tmux instead.

PS: NetBSD, like all BSD systems, writes kernel messages to the first console. So it's a good idea to work on a different console to avoid interruptions.

Sidenote: If you absolutely have to have all 12 ttys, here is how: Edit /usr/src/sys/dev/wscons/wsksymdef.h and add these two lines after #define KS_Cmd_Screen9:

#define KS_Cmd_Screen10 0xf40a

#define KS_Cmd_Screen11 0xf40b

Now edit wsdisplay.c in the same directory and change #define WSDISPLAY_MAXSCREEN 8 to 12. Edit wskbd.c and add these two lines after case KS_Cmd_Screen9::

case KS_Cmd_Screen10:

case KS_Cmd_Screen11:

Then we have to update the default keyboard maps for USB keyboards and PS/2. (alternatively, you can tweak /etc/wscons.conf, eg. wsconsctl -k -w map+='keycode 87 = Cmd_Screen10 f11 F11') Edit /usr/src/sys/dev/hid/hidkbdmap.c, so that it reads:

KC(68), KS_Cmd_Screen10, KS_f11,

KC(69), KS_Cmd_Screen11, KS_f12,

And /usr/src/sys/dev/pckbport/wskbmap_mfii.c, so that it reads:

KC(87), KS_Cmd_Screen10, KS_f11,

KC(88), KS_Cmd_Screen11, KS_f12,

Now, go to the CUSTOM kernel you created (see above), and change WSDISPLAY_DEFAULTSCREENS to 12, and add four new ttys in /etc/ttys. (the last two must be ttyE10, ttyE11, not ttyEa and ttyEb!) Finally, make a few device nodes and recompile the kernel and userland (sysadmin commands such as wsconsctl also need to be recompiled to work with the new code):

# cd /dev

# ./MAKEDEV ttyE8

# ./MAKEDEV ttyE9

# ./MAKEDEV ttyE10

# ./MAKEDEV ttyE11

# cd /usr/src/sys/arch/$(uname -m)/conf

# config CUSTOM

# cd ../compile/CUSTOM

# make clean

# make depend

# make

# mv /netbsd /netbsd.old

# mv netbsd /

# shutdown -r now # verify that the new kernel works

# cd /usr/src

# ./build.sh -O ../obj -T ../tools -U NetBSD-custom

# ./build.sh -O ../obj -T ../tools -U install=/

# shutdown -r now

One reason why stock NetBSD does not support the F11/F12 keys in tty switching might be that using these keys will cause keyboard conflicts with some of its (many) supported architectures, such as dec or hpc. In theory, you can create more than 12 ttys, but tty switching is hard coded to Ctrl + Alt + ... in wscons, so it wouldn't be practical. (you can manually switch to tty 13 with wsconscfg -s 13)

Oddly enough virtual consoles have traditionally not been available in Solaris, and they are not enabled by default in newer versions, such as OpenIndiana, either. To add six consoles in OpenIndiana for instance, you need to run:

# svcadm enable vtdaemon

# for i in 2 3 4 5 6; do

> svcadm enable console-login:vt$i

> done

# svccfg -s vtdaemon setprop options/secure=false

# svccfg -s vtdaemon setprop options/hotkeys=true

# svcadm refresh vtdaemon

# svcadm restart vtdaemon

By default you are only allowed to activate a maximum of 6 consoles. Like Linux you can change between consoles using the Alt + Fn for ttys 1-12, and AltGr + Fn for ttys 13-24, or you can use the Alt + Arrow-Key shortcuts. To get back to the desktop hit Ctrl + Alt + F7. Not all graphical drivers will allow you to switch between console and desktop however. On one of my test machines I had to disable the graphical environment with svcadm disable lightdm and do a cold reboot. From the console I could then enable the desktop again with svcadm enable lightdm, but to get to the console again I would have to do another disable and reboot.

I was able to work around these problems by configuring X to use the generic VESA driver, but naturally this meant that I had a very poor screen resolution. Hopefully your graphic driver will be more cooperative. If not it might be better to ignore the tty consoles altogether and use terminal emulators in a graphical desktop instead. But if you really want to use the VESA graphics driver you can do so by adding this file in /etc/X11/xorg.conf.d/vesa.conf:

Section "Device"

Identifier "Card0"

Driver "vesa"

EndSection

The only way I have managed to add more than 6 consoles in OpenIndiana is the following hackish method: edit /lib/svc/manifest/system/console-login.xml as root, find the <instance name='vt6' enabled='false'> line and copy this until the trailing </instance>. Edit the copy so that it reads vt8 and /dev/vt/8, instead of vt6 and /dev/vt/6, in the three places that these values are mentioned. You can now repeat this process for vt's 9-15. Reboot and run the above svcadm commands for vt's 9-15 and you now have 14 consoles (vt7 is still reserved for the desktop). If you want more than 14 consoles you need to make more device files in /dev/vt first.

Setting the console keyboard layout, to say French, is done with kbd -s French. To choose a language interactively just type kbd -s. In theory, running this command as root should change the default console keyboard layout permanently, but due to a bug in the version of OpenIndiana I tested this on, it got reset to US qwerty on every reboot. I have yet to figure out how to adjust fonts and default colors in the Solaris console, or use the mouse and scrollback buffer (I suspect this doesn't work on all hardware).

It's somewhat of a digression, but another painful issue with Solaris systems is the lack of software. For virtually all Linux and BSD variants, most of the software mentioned in this article can just be installed with the package manager, but Solaris and Illumos repositories are much thinner. Besides manually compiling the things you need by hand (it's worse than it sounds), a nice workaround here is to install the NetBSD ports collection, called pkgsrc. It is designed to work across multiple operating systems, including Solaris. Of course, not everything will work out of the box, but it does help:

# get and bootstrap pkgsrc

$ cd /usr

$ wget ftp://ftp.NetBSD.org/pub/pkgsrc/pkgsrc-2021Q2/pkgsrc.tar.gz

$ tar xzf pkgsrc.tar.gz

$ cd pkgsrc/bootstrap

$ env CFLAGS=-O2 CC=/usr/bin/gcc ./bootstrap

$ export PATH=/usr/pkg/bin:$PATH

# install a game and have some fun

$ echo 'ACCEPTABLE_LICENSES+= nethack-license' >> /usr/pkg/etc/mk.conf

$ cd ../games/nethack

$ bmake install clean # outside of NetBSD pkgsrc uses "bmake"

$ nethack

The procedure is much the same for whatever OS you want to use pkgsrc on. You can find some specific Solaris pointers here, although these instructions are for Solaris 9 and 10, and thus do not reflect Illumos systems perfectly.

The process, nay the art, of getting organized is a complex and abstract topic. We will not even try to present a self-help-book-like theory of how to do this! (there are plenty of those on Amazon if you are into that sort of thing) What we will do is show you the tools you need, and leave it as an exercise for you, dear reader, to figure out what to do with them. (see the pim section for more tips)

You are probably familiar with basic tools such as ls, cd, mkdir, rm, ln, pwd and so on. (if you'd like to try out something new though, you can check out eza or lsd (ls), zoxide or autojump (cd), bat (cat), ripgrep (grep) and fd (find) - you can find more suggestions here) The problem with organizing yourself on the command line though, is that your files are largely out of view. Out of sight, out of mind, as they say. You can quickly check what the filesystem looks like with tree -L 2 (or eza/lsd --tree). Another fantastic tool to quickly get an overview of your files, including their contents, is the file manager ranger (nnn and lf are similar, while vifm and mc are classic two-pane file managers). Or you could check out the newcomer fzf (fuzzy finder - it can actually plow through any list such as the command history or git commits). On the other hand, if you just need to figure out what's taking so much space on your harddrive try ncdu/dust (broot is a blend between ranger and ncdu), or simply du -hs * | sort -h.

Sometimes though you don't need to get a general overview, but find a specific file that you have lost. The quickest way to do this is locate myfile. But the locate command has a weakness; it relies on a database that needs to be updated manually (read the man page for the specifics). If the database is out of date, it may not contain the file you're looking for (tip: update the locate database periodically with a cron job). Worse, you may not remember the exact name, only certain details, such as general filesize and when you last worked on it. find is your friend! This command can do some impressive file searching, here are some examples:

Search for a directory named exactly MyDiR somewhere under /some/path:

$ find /some/path -type d -name MyDiR

Search for a PDF file somewhere under the current directory that is more than 10 Megabytes in size but less than 100 (the pattern is quoted so that the shell does not expand it before find sees it):

$ find . -iname '*.pdf' -size +10M -size -100M

Case insensitive search for a file called myfile somewhere under the current directory, modified within the last 10 days and belonging to the www group:

$ find . -type f -iname myfile -group www -mtime -10

Last but not least, don't forget the invaluable grep command. With it you can recursively search for any file containing a certain text, eg:

$ grep -R "UNIX is awesome" /some/path
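If you want to try these searches without touching real data, a throwaway directory tree is enough. The sketch below is only an illustration: the file names and the 10 KiB threshold are made up for the demo (the examples above use megabytes), and it assumes a find that understands -iname and the k size suffix, as the GNU and BSD versions do.

```shell
#!/bin/sh
# Build a small scratch tree to search in (all names are hypothetical).
demo=$(mktemp -d)
mkdir -p "$demo/a/MyDiR" "$demo/b"
dd if=/dev/urandom of="$demo/b/Report.PDF" bs=1024 count=20 2>/dev/null
: > "$demo/a/tiny.pdf"     # an empty file, too small to match below

# A directory named exactly MyDiR.
find "$demo" -type d -name MyDiR

# Case-insensitive match on *.pdf, larger than 10 KiB.
# The pattern is quoted so the shell hands it to find untouched.
find "$demo" -type f -iname '*.pdf' -size +10k
```

Remove the scratch tree with rm -r "$demo" when you are done experimenting.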

A terminal can run multiple programs at once. You have probably launched programs in the background before with command &. What you may not realize is that you can easily switch programs from the background to the foreground arbitrarily. To demonstrate: Type sleep 60 & and hit Enter, three times, now run jobs, and you will see these three background processes listed. Put job number 2 to the foreground with fg 2. Your console is now unresponsive; it is busy sleeping. Hit Ctrl + z and you will suspend the process to the background again. Run jobs and notice that job nr 2 doesn't have a & sign, it is currently paused. You can continue the process in the background with bg 2.

Of course, managing multiple jobs in this way is tedious. Sometimes however you might find that you need to freeze the program you are currently running, and do something real quick. Well that's easy, hit Ctrl + z, do your thing, and run fg when you're done. Presto! Your program resumes like nothing happened.
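Behind the scenes, job control is just signals: Ctrl + z makes the terminal send SIGTSTP to the foreground job, and both fg and bg resume a stopped job with SIGCONT. As a rough sketch of the same pause-and-resume cycle in a script, where there is no keyboard to press Ctrl + z on, the example below uses kill -STOP (the uncatchable sibling of SIGTSTP) on a background sleep.

```shell
#!/bin/sh
sleep 60 &
pid=$!                 # process ID of the background job

kill -STOP "$pid"      # freeze it, much like Ctrl + z would
kill -CONT "$pid"      # let it run again, as bg and fg do

kill -TERM "$pid"      # we are done with it; ask it to terminate
status=0
wait "$pid" || status=$?   # collect its exit status
echo "sleep ended with status $status"
```

A status above 128 usually means the process was ended by a signal rather than finishing on its own.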

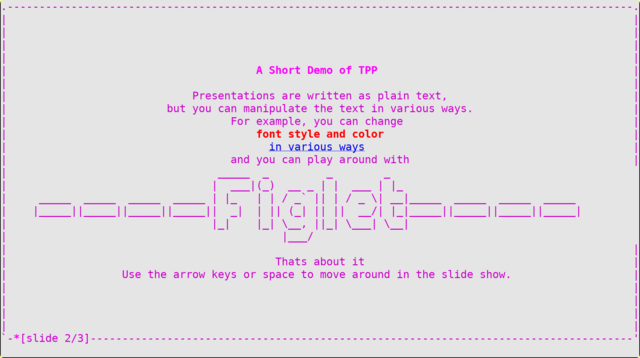

For serious multitasking on the console you need terminal multiplexers. Think of a multiplexer as a window manager for the terminal. There are many alternatives, but we will focus on tmux (The classic multiplexer screen has similar capabilities, and there are other candidates, such as dvtm, byobu and zellij).

To quickly demonstrate some of its abilities do the following: Fire up tmux and hit Ctrl + b and then c. At the bottom line you will now see 0:bash- 1:bash*. This means that there are two bash programs running, you are now seeing the program with an asterisk, 1:bash*. You can change back to 0:bash by hitting Ctrl + b and then 0, and then back to 1:bash again with Ctrl + b and then 1. But tmux can do more... Hit Ctrl + b and then %, and you will see the screen split vertically. Hit Ctrl + b and then ", and it will split horizontally. You can navigate between these window frames by hitting Ctrl + b and then one of the Arrow keys. To resize the frames, do the same, but keep holding down Ctrl as you use the Arrow keys.

If you hit Ctrl + b and then d, tmux will quit. But you haven't just exited the program, tmux is detached. If you run ps -ely | grep tmux, you will see that it is still running. You can connect to this tmux session again by running the command tmux attach. This functionality is invaluable when working remotely on a server. If the internet connection is broken when you had tmux running, just log in again and run tmux attach, and you can continue where you left off. Naturally you can have many tmux sessions running in the background, use tmux ls to list them.
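The same steps can be driven from the command line instead of key bindings, which is handy in scripts. The following is a small sketch, assuming tmux is installed; the session name console-demo is made up for the example.

```shell
#!/bin/sh
session=console-demo    # hypothetical session name
panes=0

if command -v tmux >/dev/null 2>&1 &&
   tmux new-session -d -s "$session" 2>/dev/null; then
    tmux split-window -h -t "$session"   # side by side, like Ctrl + b then %
    tmux list-sessions                   # what "tmux ls" shows
    panes=$(tmux list-panes -t "$session" | wc -l)
    tmux kill-session -t "$session"      # clean up the demo session
else
    echo "could not start tmux here; is it installed?"
fi
echo "panes in the demo session: $panes"
```

Leave out the kill-session line and you can attach to the session later with tmux attach -t console-demo.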

There is an absurd amount of things you can do with this program. Check the tmux manpage for the gory details. You can also check the available key-bindings with Ctrl + b and then ? (the notation here can be a bit confusing though: bind-key is usually set to Ctrl + b, so bind-key -T prefix d detach-client just means "hit Ctrl + b and then d to detach-client"). PS: depending on your system, tmux is often smart enough to understand mouse gestures, such as clicking on the window you want, or click and drag to resize it, after hitting the initial Ctrl + b key combo.

The console mouse in Linux is gpm. Many distros will have this daemon enabled by default, or at least will enable it if you install gpm. In Slackware you can start the console mouse with: chmod +x /etc/rc.d/rc.gpm and then /etc/rc.d/rc.gpm start. On systemd distros you can start it with systemctl start gpm.service. When gpm is running you should be able to see a square jump across the screen when you move your mouse, this is the mouse "pointer."

Copy pasting with gpm is straightforward. Left click and drag to mark text, any marked text is automatically copied to the gpm clipboard. You can paste it by middle clicking (if you don't have a middle mouse button click both buttons simultaneously). Why the middle mouse button?!? Actually this is the standard UNIX behavior, go ahead and try it on your desktop! The inefficient, left click + copy + left click + paste method, stems from Windows.

Pasting text straight to the command prompt is not that practical however, and opening up a text editor will clear the clipboard! So how do we copy something and save it to a file? Simple: run cat << eof > myfile and hit Enter. Now copy paste what you want, when you're finished write eof and hit Enter. The text is now saved in myfile. If you find this solution confusing, I recommend reading up on shell redirection.
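The same here-document trick works inside scripts, where the lines between the two markers play the role of the pasted text. A minimal sketch, with a temporary file standing in for myfile:

```shell
#!/bin/sh
notes=$(mktemp)     # stand-in for "myfile"

# Everything up to the line containing only "eof" is fed to cat,
# and cat's output is redirected into the file.
cat << eof > "$notes"
first pasted line
second pasted line
eof

cat "$notes"
```

Any word can serve as the end marker, as long as it appears alone on its own line.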

PS: BSD systems use moused as their console mouse, but it works just the same as gpm (Solaris systems don't have a console mouse AFAIK).

tmux also works great for copy pasting text, especially since you can run windows side by side and copy paste between them. But learning the keybindings will take a bit of practice. First hit Ctrl + b and then [ (i.e. open bracket) to enter copy mode, in this mode you can move the cursor around freely. Navigate to the start of the text you want to copy and hit Ctrl + Space, then navigate to the end of the text (the section you want should now be highlighted) and hit Alt + w. The text is now copied to the tmux clipboard. You can paste it any time with Ctrl + b and then ] (i.e. close bracket). You can manipulate the clipboard in many useful ways, read the documentation for further details.

If the above keybindings seemed weird, you're probably not too familiar with emacs. By default tmux uses the emacs style keybindings in copy mode. You can use vi style instead if you want, just add set-option -g mode-keys vi to ~/.tmux.conf. You can now copy-paste by hitting Ctrl + b and then [ to enter copy mode. Start marked text with Space, end it with Enter. Finally paste it with Ctrl + b and then ].

Now, I know I promised that I wouldn't talk much about system administration. But if you are going to use the console as a desktop, you still need to know how to do some basic things, like shutting down the system and so on. In the following paragraphs we will therefore consider some basic sysadmin topics. These sections are quite verbose since we are dealing with multiple operating systems, but again, you only need to read whatever is relevant for your setup.

There are various ways to shut down a computer, many systems have halt and reboot for instance. These commands are often just aliases for the shutdown command, which is extremely ubiquitous (even Windows has it for crying out loud!). But it seems like every incarnation of this command behaves a little differently:

One of the popular command line thrills is to watch colorful streaming text of presumably important information. The granddaddy monitoring application is of course top, which is the closest thing you will come to a task manager for the terminal (there is also the slightly more hip variant, htop). This ubiquitous tool can also run in batch mode, which is useful if you need a free command on non-Linux systems: top | grep Mem. The BSDs also ship with a very nice system monitoring app called systat. There are many other monitoring apps available, glances and btop are two examples, but feel free to search your repository for "top" or "mon" to discover more. Personally I don't find these programs all that useful, but they are handy when you want to make a cool screenshot of your terminal, or if you need to pretend that you are doing something important at work ;^)

To check overall disk usage run the df -h command. You can also check how much space a directory uses with du -hs mydir, or recursively check how much space each file in this directory uses with du -ha mydir.
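To find the biggest entries in a directory on systems whose sort lacks the -h flag, you can ask du for plain kilobytes and sort numerically instead. The function name biggest below is just something made up for this sketch:

```shell
#!/bin/sh
# biggest DIR [N] - list the N largest entries directly under DIR, in KiB
biggest() {
    du -sk -- "$1"/* | sort -rn | head -n "${2:-5}"
}

# Try it on a scratch directory with one large and one small file.
scratch=$(mktemp -d)
dd if=/dev/urandom of="$scratch/big"   bs=1024 count=200 2>/dev/null
dd if=/dev/urandom of="$scratch/small" bs=1024 count=2   2>/dev/null
biggest "$scratch" 1
```

Called as biggest /var 10, it would list the ten largest entries directly under /var.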

You can quickly check what disks are mounted on your system with mount | column -t, or by checking the system's disk configuration file /etc/fstab. Note that disks are handled very differently on UNIX than on Windows. In Windows each disk is its own top level directory beginning with its drive letter, such as C:\. In UNIX a disk is represented as a file in /dev and is usually called something like sda, and in contrast to Windows it can be mounted anywhere on the filesystem. For example a common configuration is to put the root filesystem, /, on a fast but small SSD harddisk, and have the home partition, /home, on a slower but bigger harddisk. Or to have partitions that change files rapidly, like /tmp or /var, on faster harddisks. Another very useful configuration is to place /tmp directly in memory, we will talk more about that later. The point is that you can arrange the disks anywhere you want in the filetree, and with new filesystems like ZFS, UNIX systems allow for even greater flexibility!

We cannot go into the finer details of every UNIX filesystem, but as an example, let's repartition and reformat a USB memory stick with the ultra archaic and portable DOS filesystem, and mount it:

After attaching the USB stick to your computer, you can run the dmesg | tail command to see if your system has detected it. You should be able to see something similar to this line towards the end:

[ 4198.053323] sd 8:0:0:0: [sdd] Attached SCSI removable disk

Don't worry too much about the details here, what's important is that the kernel detected a removable disk and called it sdd. Armed with this knowledge we can now reformat our disk:

# fdisk /dev/sdd

> p # Print a list of partitions that are on the disk

> d # Delete the partition(s)

> n # Create a new partition (just go with the defaults)

> t # Change the partition type to b - W95 FAT32

> p # List partitions and verify that everything looks correct

> w # Write changes to disk

> q # Quit fdisk

There are more user friendly alternatives to fdisk such as cfdisk, but the classic fdisk command is easy enough once you have used it a couple of times. Even though we have now created a new partition table on our memory stick, we haven't actually created a filesystem on it yet. Assuming the memory stick has only one partition, we can create a fat filesystem like so: mkfs.vfat /dev/sdd1

Now that our memory stick has a freshly reformatted filesystem, how do we use it? Well we need to mount it somewhere on the filesystem. For instance if you run the command mount /dev/sdd1 /home/myuser/Documents, you can access the memory stick from this directory. Of course that's a rather daft place to put it since you then cannot access your documents. So let's place it somewhere else (don't worry, your documents will pop back once we unmount the memory stick):

# umount /home/myuser/Documents

# mkdir -p /mnt/usb

# mount /dev/sdd1 /mnt/usb

And safely remove it by:

# umount /mnt/usb && eject /dev/sdd1
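If you do this often, picking the device name out of the kernel log can be scripted. This is only a sketch: it assumes the log line looks like the Linux example shown earlier, and the function name last_removable is invented here.

```shell
#!/bin/sh
# last_removable - read kernel log lines on stdin and print the device
# name from the last "Attached SCSI removable disk" message, if any.
last_removable() {
    sed -n 's/.*\[\(sd[a-z]*\)] Attached SCSI removable disk.*/\1/p' | tail -n 1
}

# Demonstrate on the sample line rather than on the live log.
echo '[ 4198.053323] sd 8:0:0:0: [sdd] Attached SCSI removable disk' | last_removable
```

On a real system you would feed it the kernel log, as in dmesg | last_removable, and double check the result before handing it to fdisk.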

You can check what the usb stick is called with the dmesg | tail command, or just by logging into tty1 and see what the kernel has written there. On FreeBSD/DragonFly the device will be called something like da0 and the first partition is da0s1. On OpenBSD and NetBSD the device will be called something like sd0, and you can check what the partitions are called with disklabel sd0.

The fdisk command is slightly different on all the BSD versions, so read the man page before using it. On FreeBSD/DragonFly and NetBSD you can run fdisk -u <diskname>, and an interactive process will guide you through the partitioning (make sure you choose file type 11 on your FAT partition). In OpenBSD run fdisk -e <diskname> to edit the partition, use file type 0b for your FAT partition.

When all that is done you can use newfs_msdos <diskpart> to create a new FAT 32 filesystem on it (on FreeBSD/DragonFly <diskpart> might be da0s1, on OpenBSD/NetBSD it might be sd0i - check with disklabel).

On FreeBSD/DragonFly mount and safely remove it like so:

# mkdir -p /mnt/usb

# mount -t msdosfs /dev/da0s1 /mnt/usb

# umount /mnt/usb

# sync

On OpenBSD/NetBSD mount and safely remove it like so:

# mkdir -p /mnt/usb

# mount /dev/sd0i /mnt/usb

# umount /mnt/usb

# eject /dev/sd0i

After you have inserted the USB stick, you can look for its device name with the rmformat command. It should produce an output similar to this:

Looking for devices...

1. Logical Node: /dev/rdsk/c0t0d0p0

...

Connected Device: USB DISK 2.0 1219

...

You can now edit the partition table of the disk with fdisk /dev/rdsk/c0t0d0p0. The Illumos/Solaris fdisk program is similar to cfdisk on Linux and is straightforward to use. Just make sure you create a D partition type and specify its size as 100 (assuming you want a single FAT 32 partition on the usb stick).

Finally create a FAT 32 filesystem on this partition with: mkfs -F pcfs -o fat=32 /dev/rdsk/c0t0d0p0:c. The memory stick should automatically be mounted under /media, but you can manually mount it to a different location if you want:

# umount /media/<usbname>

# mkdir -p /mnt/usb

# mount -F pcfs /dev/dsk/c0t0d0p0:c /mnt/usb

And safely remove it by:

# umount /mnt/usb && eject /dev/dsk/c0t0d0p0

PS: The raw disk devices in Illumos/Solaris are under /dev/rdsk. It's this device you use when formatting a disk. When mounting a disk however you use the block device under /dev/dsk.

The archaic FAT filesystem from MS-DOS is awkward to use under the best of circumstances. It cannot store any files larger than 4 GB, and even worse it has no concept of UNIX file permissions; these vital security settings will be wiped when you copy files over to a FAT memory stick. You can work around the permission problem by bundling your files into a tar archive, but it's a bit awkward.

So why are people still using this rusty old filesystem, aren't there any better alternatives?!? Well, yes and no. A popular choice is the newer Windows filesystem NTFS since it can store files larger than 4 GB. Of course it too has no concept of UNIX file permissions, so for UNIX users this isn't a good alternative (And even though Linux can use this filesystem, there is considerably less support in the UNIX world for NTFS than FAT). Why not just use a native UNIX filesystem on the memory stick?

You can, and in many ways, it is much simpler. Tools such as mount and newfs for instance will assume a native filesystem by default. And of course you can store large files on it, and keep your permission settings. But there is a catch! Native UNIX filesystems have virtually no support on other operating systems, including different versions of UNIX! If you happen to use only FreeBSD machines at home and at work, then by all means, put UFS on your memory sticks! But don't be surprised when Solaris refuses to mount them, even though it too uses "UFS". The most portable UNIX'y filesystem is probably old versions of the Linux EXT filesystem, such as EXT2 or EXT3. (note that EXT2 might also limit max file size to a few GB, depending on how it is configured)

For better or worse, DOS was a very simple and hugely popular operating system, so support for its filesystem is ubiquitous. There may not be many good alternatives to FAT, but there are good alternatives to memory sticks, such as sftp, scp or sshfs, which are all part of the ssh suite of programs. Another excellent tool for syncing files across the network is of course rsync. You can also set up permanent network file shares with NFS or Samba. And of course ZFS filesystems allow you to easily share files across the network with other ZFS filesystems.

UNIX systems can use filesystems that reside entirely, or partially, in memory rather than on disk. There are many ways you can use this, but we will only briefly look at how to configure your /etc/fstab to mount /tmp in memory. Bizarrely some UNIX systems don't do this by default, but assuming you have this thing called "RAM", there isn't any reason not to. For one, your /tmp directory will be much faster, and it is convenient for security too. So without further ado, simply take the line below suited to your system, and adapt it to your local needs.

Of course, these are merely suggestions. You may want to use a different size than 1 GB, and you may want to add or drop some of the mount options here. Btw, DragonFly, NetBSD, Solaris, and a few Linux distros, I am sure, will use a tmpfs /tmp partition by default. PS: edit /etc/vfstab, not /etc/fstab, on Solaris systems.

If you are having permission issues after creating this memory filesystem, try the following commands (if they don't help, make a valiant quest to a Guru on top of a network stack, and plead politely for his wisdom):

# umount /tmp

# chmod 1777 /tmp

# mount /tmp
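The 1777 mode used above is what makes a shared scratch directory workable: everyone may create files in it, but the leading 1 (the sticky bit) means that a file can only be deleted or renamed by its owner, the directory's owner or root. You can see what that looks like on a harmless temporary directory; this is just an illustration and does not touch /tmp itself:

```shell
#!/bin/sh
dir=$(mktemp -d)
chmod 1777 "$dir"

# The trailing "t" in the mode string is the sticky bit.
mode=$(ls -ld "$dir" | cut -c1-10)
echo "$mode"
```

Running ls -ld /tmp on your own system should show the same mode string if the permissions are set correctly.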

Battery management is perhaps not a vital concern for your average mainframe, but for laptops on the run, it can be crucial! There are many super advanced power optimization guides out there on the web, but really, the effective techniques are simple enough: turn off bluetooth/wifi if you don't need it and turn down screen brightness. Tweaking powersaving settings in the BIOS can also help. powertop is a handy tool for finding out exactly what nasty interactive programs are sucking your battery dry. (this is more useful on the desktop than in the console though) Lastly, it is bad practice to constantly overcharge the battery. If you make the habit of charging up the battery to ~80% and detach it when it's not needed, you'll have a decent battery for years.

On Linux you can use the acpi command to check your battery status, light to adjust screen brightness and tlp to optimize power preservation. (eg: light -S 20; sudo tlp start) By default tlp will stop charging the battery when it reaches 80%, which is handy when the brain refuses to learn the habit of pulling out the battery when it's charged.

You can check how much battery life you have left with acpiconf -i 0 | grep 'Remaining capacity' (your battery may have a different numerical ID than 0). Provided the powerd service is running, FreeBSD will try to intelligently reduce power consumption when the system isn't doing much. (I'd recommend powerd_flags="-a hiadaptive -b adaptive") To adjust screen brightness, you can try: kldload acpi_video; sysctl -a | grep brightness, and depending on the output, run a command similar to this: sysctl hw.acpi.video.lcd0.brightness=20. This even works on some hardware.

The apm command in OpenBSD lets you see battery information, and the apmd service will optimize power preservation. (i.e. doas rcctl enable apmd) Be sure to check out the apmd(8) man page, it can do some clever things! If you want to set screen brightness to, say 20%, and stop charging the battery when it reaches 80%, you can run: wsconsctl display.brightness=20 ; sysctl hw.battery.chargestop=80

To set screen brightness in NetBSD, run sysctl -a | grep brightness, and depending on the output, run a command similar to: sysctl -w hw.acpi.acpiout0.brightness=40. The apmd service in NetBSD has been superseded by powerd, although it doesn't actually do anything clever. NetBSD uses envstat to list battery and temperature statistics, so we can make a simple script to digest this information succinctly:

#!/bin/sh

# battery - print how much battery life is left

# usage: battery

envstat | awk '/charge:/ { gsub(/[()]/,""); print "Remaining:", $6 }'

Solaris/Illumos has a powerd service that tries to optimize power usage. If you can't adjust screen brightness with the keyboard, you likely can't. Retrieving battery information is done with the kstat command, but you need to manually work out what this information means in practice. Here is a simple script that prints remaining battery percentage:

#!/bin/sh

# battery - print how much battery life is left

# usage: battery

lastfull=$(kstat acpi_drv | awk '/last_cap/ { print $2 }')

remaining=$(kstat acpi_drv | awk '/rem_cap/ { print $2 }')

echo $(echo "($remaining * 100) / $lastfull" | bc)% remaining
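If bc is not installed, the shell's own integer arithmetic can do the same calculation. The sketch below only shows the arithmetic; the function name and the sample capacities are invented, and on a real system the two numbers would come from kstat as in the script above.

```shell
#!/bin/sh
# battery_percent REMAINING LASTFULL - integer percentage of charge left
battery_percent() {
    echo "$(( $1 * 100 / $2 ))"
}

# Made-up sample values: 4200 units left out of 5600 at last full charge.
echo "$(battery_percent 4200 5600)% remaining"
```

Multiplying before dividing matters here, since integer division would otherwise round the fraction down to zero.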

There is no such thing as a perfectly secure system, rather, it's a question of how much security you need. Installing a shark infested moat around your house may provide good protection against common burglars, but not against paratroopers. Pros and cons must be carefully weighed. Private citizens usually don't need to worry about paratroopers, but government officials might. Is the expense and maintenance worth it? Is the added protection worth the inconvenience of having your clumsy kids accidentally tripping into the moat from time to time? (this absurd analogy is more relevant to system administration - indeed parenting - than you may think) In that vein, we will not talk about over-engineered security mechanisms such as ACL, SELinux, PAM, Docker and so on, we will only talk about security basics that all system administrators need to know, and know well. Naturally the importance of system administration is directly proportionate to the number of users on your machine. If you only administrate yourself, the following topics are merely important.

The core security concept in UNIX is managing user and group permissions to files. By setting file permissions you can include or exclude users from collaborating on common projects, from tweaking system configurations, and from running certain programs. Consider the following example:

$ ls -ld $HOME

drwxr-xr-x 81 dan dan 2560 May 26 15:38 /home/dan

$ ls -l /bin/sh

-r-xr-xr-x 3 root bin 617544 Apr 19 18:16 /bin/sh

$ chmod +w /bin/sh

chmod: /bin/sh: Operation not permitted

$ cp /bin/sh $HOME/sh

$ chmod +w $HOME/sh

$ ls -l $HOME/sh

-rwxr-xr-x 1 dan dan 617544 May 26 15:39 /home/dan/sh

The information we are interested in here is the first string of characters after the ls -l commands. Ignoring the very first character, they show us whether or not the file has read, write or execute permissions for the owner, the group and everyone else. /home/dan has owner dan and group dan, with permission rwxr-xr-x. That is, the owner dan has permission to read, write and execute, rwx. The group dan has permission to read and execute, r-x. And so does everyone else, the last r-x. So no one except the file owner in this case can edit this directory. But everyone can look at the files, and indeed make a copy of the files to their directory and freely edit the copies. This last point is illustrated above, where we first tried to give write permissions to /bin/sh (a rather daft thing to do!). This was denied because only the file owner, root, is allowed to do so. Hence we made a private copy of it, and then made the necessary adjustments (naturally the owner of a copy is whoever made the copy). Note the security implications here: Anyone who can read a file, can make a copy of it, and subsequently modify and execute the copy!
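You can replay this experiment safely on a scratch file instead of /bin/sh. The following sketch uses made-up file names and sets the umask explicitly, so that a plain +w only affects the owner, as in the listing above:

```shell
#!/bin/sh
umask 022
work=$(mktemp -d)

# A read-only, executable "program" standing in for /bin/sh.
printf '#!/bin/sh\necho hello\n' > "$work/prog"
chmod 555 "$work/prog"

# Anyone who can read it can copy it, and whoever copies it owns the copy.
cp "$work/prog" "$work/prog-copy"
chmod +w "$work/prog-copy"

# Show just the permission strings of the original and the copy.
ls -l "$work/prog" "$work/prog-copy" | cut -c1-10
```

The copy starts out with the same permission bits as the original; only after the chmod does its owner gain write access.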

UNIX has always been an open share and share alike environment, business practices of AT&T notwithstanding, and the default file permissions reflect this ideology somewhat. If we don't want to allow everyone access to snoop and copy our private files, we can simply run this command: chmod 750 $HOME. With this set, our files are safe from prying eyes (well, not really - more on that later). If we want to grant other users the exclusive rights to read and copy our files, to work on some common project for instance, we can add them to the dan group. We could also have written the above command like so: chmod o-rwx $HOME. This latter form is perhaps easier to understand, it reads: for others (i.e. everyone else) remove read, write and execute permissions. If we wrote ug+r, it would mean: for user (i.e. the owner) and group give read permission. Often you will see the octal chmod form however, which can be tricky to understand, but you only need to know a handful of common values to get by:
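As a rough illustration of how the octal digits map onto the permission strings printed by ls -l, the sketch below applies a few commonly seen modes to a scratch file and prints the result. The helper name show_mode and the selection of modes are my own picks for illustration, not a canonical list.

```shell
#!/bin/sh
# Each octal digit is a sum: read = 4, write = 2, execute = 1.
# The three digits apply to owner, group and others, in that order.

# show_mode MODE (hypothetical helper): apply MODE to a scratch file and
# print its permission string (the first 10 characters of ls -l output).
show_mode() {
    scratch=$(mktemp) || return 1
    chmod "$1" "$scratch"
    ls -l "$scratch" | cut -c1-10
    rm -f "$scratch"
}

for mode in 600 640 644 700 750 755; do
    printf '%s  %s\n' "$mode" "$(show_mode "$mode")"
done
```

Reading the output, 750 comes out as -rwxr-x--- (7 = 4+2+1 for the owner, 5 = 4+1 for the group, 0 for others), and 644 as -rw-r--r--.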

Now running the command chmod 750 $HOME will indeed prevent (almost) anyone from tampering with our home directory, but what about all of the files within this directory? Actually, a 750 permission on $HOME is quite sufficient. But there are situations where it might be desirable to recursively set file permissions throughout a file tree. So let's assume that we want to set a 750 permission for all files and directories under $HOME. We could do so with the following command: chmod -R 750 $HOME. But this is a very sloppy thing to do! The problem is that we don't really want execute permissions for ordinary files, except of course our custom shell scripts and assorted programs in ~/bin. We want a 640 permission on plain files, and only a 750 permission for directories (directories NEED execute permission!). Setting unnecessary execute permissions on plain files will not mean the end of humanity, but it is an uncouth move, and it will earn you newb points! The correct solution would be something more akin to this:

$ find $HOME \( -path "*/bin/*" -prune \) -o \
    \( \( -type d -a -exec chmod 750 {} \; \) -o \
    \( -type f -a -exec chmod 640 {} \; \) \)

It's OK to admit that the above command scares you. We could simplify this operation by doing it in stages, and actually, it's fine if you just lazily type chmod -R in the privacy of your own home. I will not tell on you. Sloppiness isn't really a major issue unless you are working professionally as a sysadmin. If that is the case, the above command is nothing less than job security. Just make sure you run this whenever your boss is looking over your shoulder.
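For what it's worth, the staged approach mentioned above might look something like the following sketch. The function name fix_perms is made up for this example, and it assumes that "everything under a bin/ directory" is what should be left alone, as in the one-liner.

```shell
#!/bin/sh
# fix_perms DIR (hypothetical helper): directories get 750, plain files
# get 640, and anything inside a bin/ directory is left untouched.
fix_perms() {
    # Stage 1: directories need the execute bit to be entered.
    find "$1" -type d ! -path '*/bin/*' -exec chmod 750 {} +
    # Stage 2: plain files, skipping everything under a bin/ directory.
    find "$1" -type f ! -path '*/bin/*' -exec chmod 640 {} +
}
```

You would call it as fix_perms "$HOME", preferably after trying it on a scratch directory first. Two passes over the tree are slower than one, but each line is easy to read and easy to test on its own.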

To illustrate user and group management, we create a new user, bob, a new group, manhat, assign Bob to this group, set the new user and group as owners for some files in /var/manhattan, and lastly make these files secret for anyone outside of the Manhattan project:

Linux:

# adduser # bob

# groupadd manhat

# usermod -a -G manhat bob

# chown -R bob:manhat /var/manhattan

# chmod 770 /var/manhattan

FreeBSD:

# adduser # bob

# pw groupadd manhat

# pw groupmod manhat -m bob

# chown -R bob:manhat /var/manhattan

# chmod 770 /var/manhattan

OpenBSD:

# adduser # bob

# groupadd manhat

# usermod -G manhat bob

# chown -R bob:manhat /var/manhattan

# chmod 770 /var/manhattan

NetBSD:

# useradd -m bob

# groupadd manhat

# usermod -G manhat bob

# chown -R bob:manhat /var/manhattan

# chmod 770 /var/manhattan

Solaris:

# useradd -m bob

# groupadd manhat

# groupmod manhat -U +bob # Oracle Solaris

# usermod -G manhat bob # Illumos

# chown -R bob:manhat /var/manhattan

# chmod 770 /var/manhattan

As you can see, there is a disturbing amount of variation in user management across UNIX systems (chown and chmod however work the same). And these differences are somewhat incompatible. For instance usermod -G manhat bob is fine in OpenBSD and NetBSD, but that command will nuke Bob's membership in any group besides manhat in Linux and Solaris, whereas FreeBSD will, "thankfully", only give you a not found error. It would also have been nice if we could call our group manhattan, but surprisingly enough, some Unixes (eg. Solaris) cannot handle long group names. But I suppose our little Manhattan project illustrates that system administration often involves a level of surprise, pain, embarrassment, and occasional atrocities.
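Given how easily a group change can go wrong, it may be worth checking a user's memberships before and after touching them. A minimal sketch follows, relying only on id -Gn, which should behave the same on all of the systems above. The helper name in_group is invented for this example, and it assumes group names without spaces.

```shell
#!/bin/sh
# in_group USER GROUP (hypothetical helper): succeed if USER is a
# member of GROUP, going by what the system reports.
in_group() {
    # id -Gn prints all group names of the user on one line.
    for g in $(id -Gn "$1"); do
        [ "$g" = "$2" ] && return 0
    done
    return 1
}

# Usage, with the names from the example above:
#   id -Gn bob                                  # list all of Bob's groups
#   in_group bob manhat && echo "bob is in manhat"
```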

Beware that there are other, more subtle, peculiarities between these systems as well. The BSDs have the unique concept of login classes, and Solaris has a thing about "profiles", for instance. As always, the sysadmin's primary task is to read the manpages carefully!

PS: It is possible to adjust user and group settings by hacking the files /etc/passwd and /etc/group as root, and in the heyday of AT&T UNIX this was in fact the only way to add new users to the system. But this kind of Wild West approach is considered bad practice today. However inconsistent and ugly, it's still better to use dedicated sysadmin tools for such tasks.

It turns out that our top secret Manhattan project above isn't quite as secure as we may think. You see, UNIX systems have an all-powerful user called root. The root user can do anything, no restrictions whatsoever (of course the root user can set restrictions on himself, but he can also rescind them). This concept is a lot more powerful than the "system administrator" that you will see on some non-UNIX'y systems. The root user is not only able to configure the system any way he wants, but he can engage in deep brain surgery and wanton destruction as he pleases. In fact, anytime you see root in a UNIX context, replace it with the LORD, and you will get the right idea. Beyond file encryption it is impossible to hide anything from root. Windows users may find this disconcerting, but in actuality they have the exact same issue, it's just that their "root" user is an unknown, plausibly evil, corporate entity called Microsoft (MacOS and Android (and Ubuntu for that matter), being UNIX after all, have a real root account - but like Microsoft, they try very hard to hide this fact from their users).

You can switch to the root user by typing the command su (or su - to simulate a full login), followed by the root password. By the way, you can use this command to switch to any user you have the password for, eg. su bob. It goes without saying that your user passwords should be a well guarded secret. But the root password is something you do not share even with your closest confidant! To quote Micah 7:5:

«Trust ye not in a friend, put ye not confidence in a guide: keep the doors of thy mouth from her that lieth in thy bosom.»

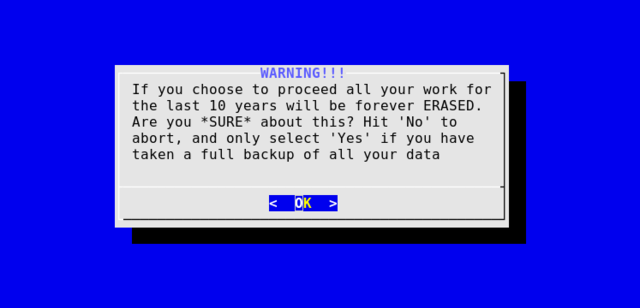

With careful group management, it is quite possible to delegate system administration tasks to users without giving them root access. Users working on a web server may be part of the www group, in order to work on files in /var/www for instance. If these users also need to run some database, or other web server maintenance program, the sysadmin can just run chgrp www on these programs, and set permissions to 550, in order to allow www users to run them, but no one else. But root is so powerful, that the system administrator himself should not use it, if it can be avoided. To illustrate the danger, suppose you meant to type rm -rf $HOME/* but mistyped it: rm -rf $HIME/*. Congratulations! You have now deleted all files on your computer.

Another, more subtle example: Suppose you are working in /var/www/mysite, and decide to delete the whole mess in an act of righteous indignation: rm -rf ../* Permission denied! Oh really, you think smugly to yourself, and do a full root login with: su -, then rm -rf ../*. Congratulations! You have now deleted all files on your computer (do you see why?). The point? Don't use root! This is where sudo comes into play. In its simplest form, you can edit /etc/sudoers (preferably with visudo, which checks the syntax before saving), and uncomment the line that says #%wheel ALL=(ALL) ALL, that is, remove the # sign. Then make sure that your user is a part of the wheel group, eg. usermod -a -G wheel myuser in Linux (some distros use sudo instead of wheel here).

PS: Whenever you need to run some dangerous command, it's a good idea to check first with echo. For instance, the two disastrous file munching commands mentioned above, could have easily been averted with a simple sanity check: echo rm -rf ...
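To make the sanity check concrete, here is a small sketch. It first does the echo dry run on a scratch directory, then wraps rm in a function that refuses an empty argument by way of the shell's ${1:?} expansion. The name clean_dir is made up for this example; treat it as an illustration of the idea rather than a replacement for thinking before you type.

```shell
#!/bin/sh
# A scratch directory to play with, so nothing of value is at risk.
demo=$(mktemp -d) && touch "$demo/a" "$demo/b"

# Dry run: echo prints the command as the shell expanded it,
# and deletes nothing.
echo rm -rf "$demo"/*

# clean_dir DIR (hypothetical helper): delete the contents of DIR.
# ${1:?...} aborts with an error if the argument is unset or empty,
# so a mistyped variable can never turn the path into "/*".
clean_dir() {
    rm -rf -- "${1:?clean_dir: empty directory argument}"/*
}

clean_dir "$demo"    # empties the scratch directory
rmdir "$demo"
```

With this in place, clean_dir "$HIME" stops with an error message instead of expanding to rm -rf /*. Note that in a script the failed expansion makes the shell exit, which is arguably what you want at that point.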

With this in place, you can run sudo command, to execute this one command "as root". That still makes you disturbingly powerful of course, but it is marginally better than monkeying about blindly as the Almighty root; at the very least it makes you open up the briefcase before you hit the big red button. sudo also logs any commands executed by it. So assuming the computer survives the attempt, you will know who to blame when the dust settles. The true beauty of sudo however, is that it allows you to define very fine grained privileges. The above line could have been bob 192.168.0.12 = (operator) /bin/kill, /usr/bin/lprm, for instance. Meaning that the user bob can run the commands kill and lprm on the machine 192.168.0.12, but only as the operator user (eg. sudo -u operator kill). You can define aliases for users and commands and such, in order to administrate things further. If we defined Host_Alias CSNETS = 192.168.0.12, 128.138.204.0/24, 128.138.242.0 for example, we could have written CSNETS instead of 192.168.0.12, to allow bob access to this list of machines.
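Put together, the alias and the rule from the paragraph above would sit in the sudoers file as two lines. The sketch below writes them to a scratch file and, if visudo happens to be installed and in your PATH, asks it to check the syntax. Nothing is installed into /etc, and the scratch file is only there for the experiment.

```shell
#!/bin/sh
# Write the sudoers lines from the text to a scratch file.
fragment=$(mktemp) || exit 1
cat > "$fragment" <<'EOF'
Host_Alias CSNETS = 192.168.0.12, 128.138.204.0/24, 128.138.242.0
bob CSNETS = (operator) /bin/kill, /usr/bin/lprm
EOF

# visudo -c only checks syntax; -f points it at our scratch file
# instead of the real /etc/sudoers.
if command -v visudo >/dev/null 2>&1; then
    visudo -c -f "$fragment" || echo "syntax check failed" >&2
else
    echo "visudo not found; skipping syntax check" >&2
fi
# Remove the scratch file when done: rm -f "$fragment"
```

Checking a rule this way before it goes anywhere near the live configuration is cheap insurance, since a broken sudoers file can lock you out of sudo altogether.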

PS: Not all variants of UNIX come with sudo by default, and some have their own sudo'y kind of commands (eg. pfexec in Solaris and doas in OpenBSD). But all UNIX systems have sudo in their repositories at least.

About a thousand years ago, when Vikings roamed the sea and pillaged unsuspecting monasteries, people used telnet to log in to their remote UNIX machines. It was truly a barbaric time when "security" was largely an unknown concept. Today of course we use ssh to connect safely, and civilly, to our remote boxes. But simply having ssh will not magically make the world around you safe. You need to use this tool correctly. In a word, this means: disable password authentication.

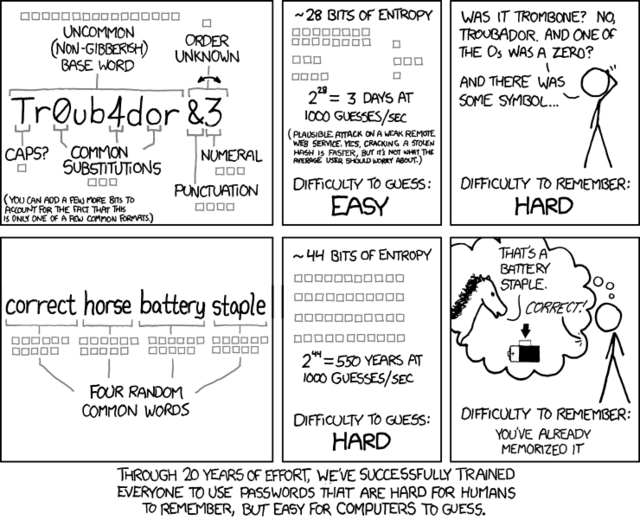

By default, an ssh server will accept a password login from anyone who has an account on the machine. Naturally this has to be the default, it would be impossible to set up a remote server otherwise. But the very first thing you should do on your shiny new server, is to set up public key authentication and then DISABLE password authentication! Passwords are a good way to protect your laptop from benevolent coworkers, they are NOT a safe way to protect your server out there on the hostile internet. There exists a network of bots on the web, dubbed the "Hail Mary Cloud", that will systematically probe any ssh server it can find. This cloud will try to log in to your server a few times from one IP address, then try again with another IP, and so on and so on... If you allow password authentication on your remote ssh server, it will get compromised, not if, but when.

The answer to this problem is public keys. To understand why, we can use a simple analogy: Suppose you have set up a secret Pirate club in downtown New York, with a big flashy neon sign that says "Secret Password Required Upon Entry!" Well, daily you'll be pestered with kids trying to guess the password, and eventually one of them will get it right. Before long your top secret Pirate den is full to the brim with script kiddies. The point? Use keys. Anyone who now wants to sneak into your club must first accost a Pirate and steal his key. That can still happen, but you are likely safe from kids at least. Naturally, the bouncer should still ask for a secret password, for added security against such an event. To create an ssh key on your laptop run ssh-keygen. Once this is done, copy your key over to the server with: cat ~/.ssh/id_rsa.pub | ssh myserver 'cat >>~/.ssh/authorized_keys' (if your ssh-keygen created a different key type, use that file instead, eg. ~/.ssh/id_ed25519.pub). When you now log on to your server with ssh, it should say: Enter passphrase for key.... When you have verified in this way that your key is working, go ahead and disable password authentication on your ssh server. Edit /etc/ssh/sshd_config, and change these options:

ChallengeResponseAuthentication no

PasswordAuthentication no

PubkeyAuthentication yes
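Since a typo here can either lock you out or silently leave passwords enabled, it doesn't hurt to double check the file afterwards. The following is a rough sketch of such a check. The function name sshd_option_is is invented for this example, and it makes simplifying assumptions: it only looks at plain "Keyword value" lines in the one file you give it, and knows nothing about Match blocks or included files.

```shell
#!/bin/sh
# sshd_option_is FILE KEYWORD VALUE (hypothetical helper): succeed if
# the first uncommented occurrence of KEYWORD in FILE is set to VALUE.
# sshd uses the first value it finds for a keyword, and keywords are
# case-insensitive, hence the tolower() on the keyword only.
sshd_option_is() {
    awk -v key="$2" -v want="$3" '
        tolower($1) == tolower(key) {
            found = ($2 == want)
            exit
        }
        END { exit !found }
    ' "$1"
}

# Usage, with the path and options from the text:
#   sshd_option_is /etc/ssh/sshd_config PasswordAuthentication no \
#       && echo "password logins are off"
```

The ssh daemon can also validate its own configuration with sshd -t (run as root), and it has to be restarted or reloaded before the changes take effect. Keep your current session open while you test a fresh login from another terminal.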